Core Machine (Operating system/System info/Roon build number)

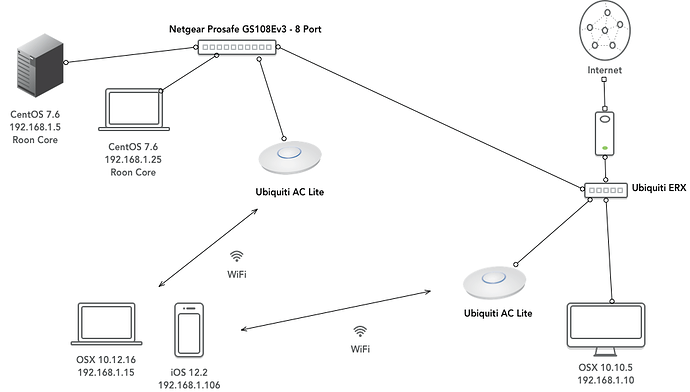

Roon 1.6 (416) on CentOS 7.6 (192.168.1.5)

Network Details (Including networking gear model/manufacturer and if on WiFi/Ethernet)

2x Unifi AP AC-Lite wifi (all devices on same SSID)

1x Netgear Prosafe switch

OK: 192.168.1.15 (control, OSX10.12.6) -> wifi -> 192.168.1.5 (core)

OK: 192.168.1.106 (control, iOS12.2) -> wifi -> 192.168.1.5 (core)

NOT OK: 192.168.1.10 (control, OSX10.10.5) -> netgear prosafe -> 192.168.1.5 (core)

SOMETIMES OK: 192.168.1.14 (control, OSX10.14.4) -> wifi -> 192.168.1.5 (core)

Audio Devices (Specify what device you’re using and its connection type - USB/HDMI/etc.)

- KEF LSX wifi

- Grace Mondo+ wifi

- Squeezebox Radio wifi

Description Of Issue

Controllers are unable to find the core, or they find it and then lose it again. I’ve verified via tcpdump and netstat that UDP 9003 and TCP 9100-9200 are listening on the core, connectable from the controllers, and receiving data.
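For anyone wanting to repeat the TCP part of that check from each controller machine, here is a minimal sketch, assuming Python 3 is available there. The helper name `reachable_tcp_ports` is made up for illustration. It only tests whether a TCP connection can be opened, so it says nothing about the UDP 9003 traffic.

```python
import socket


def reachable_tcp_ports(host, ports, timeout=0.5):
    """Return the ports on `host` that accept a TCP connection within `timeout` seconds."""
    open_ports = []
    for port in ports:
        try:
            # create_connection raises OSError (refused, timed out, unreachable) on failure
            with socket.create_connection((host, port), timeout=timeout):
                open_ports.append(port)
        except OSError:
            pass
    return open_ports


# Example call, using the core address and port range from this post:
#   reachable_tcp_ports("192.168.1.5", range(9100, 9201))
```

Running it on the .10 (wired) and .15 (wifi) controllers and comparing the two lists would show whether the TCP ports look the same from both sides of the switch.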

With the controller on .14 (OSX) I can consistently reproduce this sequence: find Roon core, connect, restart, "looking for core…", restart, maybe find core, maybe not, and so on.

With a couple of recently installed controllers on OS X, discovery is stuck on ‘looking for core’.

Using the scan feature (under looking -> help) there is no progress indicator or output.

What tests can I run, or which logs can I examine, to determine what’s wrong here?