Thanks, and sorry I’m such a n00b. I didn’t know how to get the built-in help working under podman: I don’t know how to run udp-proxy-2020 except in a container, and when it runs in a container I don’t know where the help output goes. I’ll do my best.

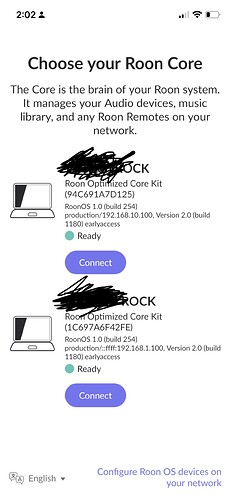

In any case, to recap: I’m trying to set up a single Roon Core to cover two homes. There was a Core running at the secondary home behind the USG, but I’ve shut it down for now. The basic setup is:

```
Roon Core + endpoints -> UDM Pro (udp-proxy-2020) <------> USG 3 (udp-proxy-2020) --> endpoints
```

The Core runs at 192.168.1.100 on the 192.168.0.0/23 subnet, and the secondary home network uses 192.168.10.0/23. The two sites are connected by a site-to-site OpenVPN tunnel configured on the UDM Pro and the USG 3. The interfaces we want are br0 and tun1 on the UDM, and eth1 and vtun64 on the USG. I want to be able to run a remote at either location and have it work, i.e. have the Core behind the UDM work with endpoints behind both the UDM and the USG.

The command the shell script runs on the UDM is:

```
podman run -i -d --rm --net=host --name udp-proxy-2020 -e PORTS=9003 -e INTERFACES=br0,tun1 -e TIMEOUT=250 -e CACHETTL=90 -e EXTRA_ARGS="-L trace" synfinatic/udp-proxy-2020:latest
```

The command running on the USG is:

```
sudo ./udp-proxy-2020 -L trace --port 9003 --interface eth1,vtun64 --fixed-ip=vtun64@192.168.51.51
```

(I’m not sure whether --fixed-ip is needed; I’ve tried it both with and without, and the results are very similar. 192.168.51.51 is the OpenVPN tunnel IP on the UDM.)

The UDM logs show an endlessly repeated set of:

```
# podman logs 20f9a3409b6a
level=debug msg="br0: ifIndex: 12"
level=debug msg="br0 network: ip+net\t\tstring: 192.168.1.1/23"
level=debug msg="br0 network: ip+net\t\tstring: 2601:19b:700:518d::1/64"
level=debug msg="br0 network: ip+net\t\tstring: fe80::68d7:9aff:fe29:75aa/64"
level=debug msg="Listen: (main.Listen) {\n iname: (string) (len=3) \"br0\",\n netif: (*net.Interface)(0x40000573c0)({\n Index: (int) 12,\n MTU: (int) 1500,\n Name: (string) (len=3) \"br0\",\n HardwareAddr: (net.HardwareAddr) (len=6 cap=41524) 6a:d7:9a:29:75:aa,\n Flags: (net.Flags) up|broadcast|multicast\n }),\n ports: ([]int32) (len=1 cap=1) {\n (int32) 9003\n },\n ipaddr: (string) (len=13) \"192.168.1.255\",\n promisc: (bool) false,\n handle: (*pcap.Handle)(<nil>),\n writer: (*pcapgo.Writer)(<nil>),\n inwriter: (*pcapgo.Writer)(<nil>),\n outwriter: (*pcapgo.Writer)(<nil>),\n timeout: (time.Duration) 250ms,\n clientTTL: (time.Duration) 0s,\n sendpkt: (chan main.Send) (cap=100) 0x4000058660,\n clients: (map[string]time.Time) {\n }\n}\n"
level=debug msg="tun1: ifIndex: 43"
level=debug msg="Listen: (main.Listen) {\n iname: (string) (len=4) \"tun1\",\n netif: (*net.Interface)(0x400027a700)({\n Index: (int) 43,\n MTU: (int) 1500,\n Name: (string) (len=4) \"tun1\",\n HardwareAddr: (net.HardwareAddr) ,\n Flags: (net.Flags) up|pointtopoint|multicast\n }),\n ports: ([]int32) (len=1 cap=1) {\n (int32) 9003\n },\n ipaddr: (string) \"\",\n promisc: (bool) true,\n handle: (*pcap.Handle)(<nil>),\n writer: (*pcapgo.Writer)(<nil>),\n inwriter: (*pcapgo.Writer)(<nil>),\n outwriter: (*pcapgo.Writer)(<nil>),\n timeout: (time.Duration) 250ms,\n clientTTL: (time.Duration) 0s,\n sendpkt: (chan main.Send) (cap=100) 0x4000058720,\n clients: (map[string]time.Time) {\n }\n}\n"
level=debug msg="br0: applying BPF Filter: (udp port 9003) and (src net 192.168.0.0/23)"
level=debug msg="Opened pcap handle on br0"
level=debug msg="tun1: applying BPF Filter: (udp port 9003) and (src net 192.168.51.51/32)"
level=debug msg="Opened pcap handle on tun1"
level=debug msg="Initialization complete!"
level=debug msg="handlePackets(br0) ticker"
level=debug msg="handlePackets(tun1) ticker"
level=debug msg="handlePackets(tun1) ticker"
level=debug msg="handlePackets(br0) ticker"
level=debug msg="handlePackets(tun1) ticker"
level=debug msg="handlePackets(br0) ticker"
level=debug msg="handlePackets(br0) ticker"
level=debug msg="handlePackets(tun1) ticker"
level=debug msg="br0: received packet and fowarding onto other interfaces"
level=debug msg="tun1: sending out because we're not br0"
level=debug msg="processing packet from br0 on tun1"
level=debug msg="tun1: Unable to send packet; no discovered clients"
level=debug msg="handlePackets(br0) ticker"
level=debug msg="handlePackets(tun1) ticker"
level=debug msg="handlePackets(br0) ticker"
level=debug msg="handlePackets(tun1) ticker"
```

The USG logs basically look like this:

```
DEBUG handlePackets(vtun64) ticker
DEBUG handlePackets(eth1) ticker
DEBUG handlePackets(vtun64) ticker
DEBUG handlePackets(eth1) ticker
DEBUG handlePackets(vtun64) ticker
DEBUG handlePackets(eth1) ticker
DEBUG handlePackets(vtun64) ticker
DEBUG eth1: received packet and fowarding onto other interfaces
DEBUG vtun64: sending out because we're not eth1
DEBUG eth1: received packet and fowarding onto other interfaces
DEBUG processing packet from eth1 on vtun64
DEBUG vtun64 => 192.168.51.51: packet len: 132
DEBUG vtun64: sending out because we're not eth1
DEBUG eth1: received packet and fowarding onto other interfaces
DEBUG vtun64: sending out because we're not eth1
DEBUG processing packet from eth1 on vtun64
DEBUG vtun64 => 192.168.51.51: packet len: 132
DEBUG processing packet from eth1 on vtun64
DEBUG vtun64 => 192.168.51.51: packet len: 132
DEBUG handlePackets(eth1) ticker
DEBUG handlePackets(vtun64) ticker
DEBUG handlePackets(eth1) ticker
DEBUG handlePackets(vtun64) ticker
DEBUG handlePackets(eth1) ticker
DEBUG handlePackets(vtun64) ticker
DEBUG handlePackets(eth1) ticker
DEBUG handlePackets(vtun64) ticker
```

I can see you’ve dealt with this exact set of errors before. The `level=debug msg="tun1: Unable to send packet; no discovered clients"` message seems to mean that no packets are being passed from br0 on the UDM to tun1.

I think that’s confirmed, because when I run tcpdump on br0 on the UDM while restarting Roon, I get the following:

```
# tcpdump -ni br0 -s 0 udp port 9003
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on br0, link-type EN10MB (Ethernet), capture size 262144 bytes
00:32:39.888512 IP 192.168.0.91.64282 > 192.168.1.255.9003: UDP, length 339
00:32:39.888660 IP 192.168.0.91.51259 > 192.168.1.255.9003: UDP, length 339
00:32:39.888769 IP 192.168.0.91.50307 > 192.168.1.255.9003: UDP, length 339
00:32:45.057133 IP 192.168.1.32.35879 > 192.168.1.255.9003: UDP, length 104
00:32:53.214127 IP 192.168.1.100.51544 > 192.168.1.1.9003: UDP, length 98
00:32:53.214387 IP 192.168.1.100.51544 > 192.168.1.1.9003: UDP, length 98
00:32:53.271749 IP 192.168.0.91.58545 > 192.168.1.1.9003: UDP, length 98
00:32:53.389162 IP 192.168.1.100.51544 > 192.168.2.1.9003: UDP, length 98
00:32:53.399205 IP 192.168.1.100.51544 > 192.168.1.255.9003: UDP, length 98
00:32:53.399241 IP 192.168.1.100.51544 > 192.168.2.1.9003: UDP, length 98
00:32:53.409449 IP 192.168.1.100.51544 > 192.168.1.255.9003: UDP, length 98
00:32:53.446159 IP 192.168.0.91.58545 > 192.168.1.1.9003: UDP, length 265
00:32:53.496647 IP 192.168.0.91.58545 > 192.168.1.255.9003: UDP, length 98
00:32:53.602706 IP 192.168.0.91.58545 > 192.168.1.255.9003: UDP, length 265
00:32:54.177941 IP 192.168.0.91.58545 > 192.168.1.1.9003: UDP, length 98
00:32:54.267409 IP 192.168.0.91.58545 > 192.168.1.1.9003: UDP, length 98
00:32:54.448583 IP 192.168.0.91.58545 > 192.168.1.1.9003: UDP, length 98
00:32:54.601996 IP 192.168.0.91.58545 > 192.168.1.255.9003: UDP, length 98
00:32:54.762812 IP 192.168.0.91.58545 > 192.168.1.255.9003: UDP, length 98
00:32:54.780736 IP 192.168.0.91.58545 > 192.168.1.255.9003: UDP, length 98
00:32:54.971433 IP 192.168.0.91.50664 > 192.168.1.255.9003: UDP, length 339
00:32:54.971634 IP 192.168.0.91.65506 > 192.168.1.255.9003: UDP, length 339
00:32:54.971779 IP 192.168.0.91.55883 > 192.168.1.255.9003: UDP, length 339
00:32:55.379288 IP 192.168.0.91.58545 > 192.168.1.1.9003: UDP, length 98
00:32:55.804988 IP 192.168.0.91.58545 > 192.168.1.255.9003: UDP, length 98
```

But when I run tcpdump on tun1 on the UDM and shut down and restart Roon, I only see inbound traffic from a single RAAT endpoint at 192.168.10.30 behind the USG (so the USG side must be passing packets in the other direction):

```
# tcpdump -ni tun1 -s 0 udp port 9003
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on tun1, link-type RAW (Raw IP), capture size 262144 bytes
00:34:53.273009 IP 192.168.10.30.52502 > 192.168.51.51.9003: UDP, length 104
00:34:53.278286 IP 192.168.10.30.52502 > 192.168.51.51.9003: UDP, length 104
00:34:53.278327 IP 192.168.10.30.55085 > 192.168.51.51.9003: UDP, length 104
00:35:53.395630 IP 192.168.10.30.52502 > 192.168.51.51.9003: UDP, length 104
00:35:53.395705 IP 192.168.10.30.52502 > 192.168.51.51.9003: UDP, length 104
00:35:53.395740 IP 192.168.10.30.55085 > 192.168.51.51.9003: UDP, length 104
```
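Comparing the tun1 capture with the BPF filters the UDM logged at startup, here is another rough Go sketch. It only models the `src net` part of each filter and assumes the `udp port 9003` part already matches (the captures were filtered on that port). It is not libpcap’s filter engine or udp-proxy-2020’s client-discovery code, and `srcNetMatches` is a made-up helper. If this model is right, the packets from 192.168.10.30 on tun1 would not pass `src net 192.168.51.51/32`, which might be why no clients are ever discovered on tun1, but that is only my guess.

```go
package main

import (
	"fmt"
	"net/netip"
)

// srcNetMatches mimics only the "src net X" clause of a BPF filter: it reports
// whether src falls inside filterNet. Hypothetical helper for this sketch.
func srcNetMatches(filterNet, src string) bool {
	return netip.MustParsePrefix(filterNet).Contains(netip.MustParseAddr(src))
}

func main() {
	// "src net" values copied from the UDM's udp-proxy-2020 startup log.
	filters := map[string]string{
		"br0":  "192.168.0.0/23",
		"tun1": "192.168.51.51/32",
	}
	// Source addresses seen in the tcpdump captures on each interface.
	seen := map[string][]string{
		"br0":  {"192.168.0.91", "192.168.1.32", "192.168.1.100"},
		"tun1": {"192.168.10.30"},
	}

	for _, iface := range []string{"br0", "tun1"} {
		for _, src := range seen[iface] {
			fmt.Printf("%s: src %s passes \"src net %s\": %v\n",
				iface, src, filters[iface], srcNetMatches(filters[iface], src))
		}
	}
	// Expected: every br0 source prints true; 192.168.10.30 on tun1 prints false.
}
```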

In case it’s useful, here are the relevant portions of ifconfig from the UDM:

```
br0       Link encap:Ethernet  HWaddr 6A:D7:9A:29:75:AA
          inet addr:192.168.1.1  Bcast:0.0.0.0  Mask:255.255.254.0
          inet6 addr: 2601:19b:700:518d::1/64 Scope:Global
          inet6 addr: fe80::68d7:9aff:fe29:75aa/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:20115653 errors:0 dropped:1 overruns:0 frame:0
          TX packets:81190735 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:9794685569 (9.1 GiB)  TX bytes:109902935292 (102.3 GiB)

tun1      Link encap:UNSPEC  HWaddr 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00
          inet addr:192.168.51.51  P-t-P:192.168.50.50  Mask:255.255.255.255
          inet6 addr: fe80::4107:b289:2df4:c8ce/64 Scope:Link
          UP POINTOPOINT RUNNING NOARP MULTICAST  MTU:1500  Metric:1
          RX packets:43792 errors:0 dropped:0 overruns:0 frame:0
          TX packets:40050 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:500
          RX bytes:38001308 (36.2 MiB)  TX bytes:3281215 (3.1 MiB)
```

Hope this is helpful. Many, many thanks.