I dispute that; it is one of JWM's original claims that have since been debunked. There is no such thing as an inherent "system-related distortion" of cone drivers, and so far no one has been able to explain to me what kind of inherent distortion that is supposed to be. Nor is the MST in any way different from a conventional electrodynamic transducer in terms of voicecoil mass, movement or diaphragm behavior.

On the contrary, there are indications that the particular geometry of the MST's voicecoil and diaphragm induces certain phenomena such as distortion, resonances, out-of-phase movement and directivity errors, originating from the diameter of the excited diaphragm area and the acoustic behavior of the material.

The two main factors that set the MST apart from other dynamic drivers are the voicecoil size (3" if I am not mistaken) and the fact that it is a suspension-less, surround-less bending wave diaphragm with high inner damping. This has implications particularly for its dynamic behavior (distortion, compression, resonances) as well as the directivity. These factors seemingly explain why the MST sounds very different.
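To put the voicecoil size into numbers, here is a rough sketch that computes the Helmholtz number ka (wavenumber times radius) for a 3" (76.2 mm) diameter, i.e. the size I am assuming above. As a rule of thumb, a circular radiator stays fairly omnidirectional well below ka ≈ 1 and starts to beam above it. The speed of sound of 343 m/s and the idea that the voicecoil diameter sets the relevant radiating size are simplifying assumptions on my part, not Manger specifications.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C (assumption)

# Assumed 3" voicecoil diameter, as guessed in the post (not a verified spec).
VOICECOIL_DIAMETER_M = 3 * 0.0254


def ka(frequency_hz: float, diameter_m: float) -> float:
    """Helmholtz number k*a for a circular radiator of the given diameter."""
    radius = diameter_m / 2.0
    k = 2.0 * math.pi * frequency_hz / SPEED_OF_SOUND  # wavenumber in rad/m
    return k * radius


def frequency_for_ka(target_ka: float, diameter_m: float) -> float:
    """Frequency at which a radiator of the given diameter reaches target_ka."""
    radius = diameter_m / 2.0
    return target_ka * SPEED_OF_SOUND / (2.0 * math.pi * radius)


if __name__ == "__main__":
    for f in (500, 1000, 2000, 4000, 8000):
        print(f"{f:>5} Hz: ka = {ka(f, VOICECOIL_DIAMETER_M):.2f}")
    print(f"ka = 1 at about {frequency_for_ka(1.0, VOICECOIL_DIAMETER_M):.0f} Hz")
```

Under these assumptions ka reaches 1 at roughly 1.4 kHz, i.e. right in the middle of the range where the MST is supposed to work as a bending-wave radiator, which is why I think the voicecoil diameter matters so much for directivity.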

That is nothing unusual and can be explained by the combination of a large voicecoil with the aforementioned bending-wave diaphragm, which is also very light.

There are other drivers covering a similar or larger frequency range (fullrange drivers as well as 1.5-way or coaxial designs), there are other bending-wave concepts, and there are suspension-less or surround-less drivers on the market. They all sound very, very different from the Manger, which hints that this is not the main reason for its particular sound; other factors such as the directivity are more likely responsible.

Concepts with AMT midranges exist, and they sound very, very different from the Manger.

What is the inherent problem of the cone drivers that the Manger does not bring?

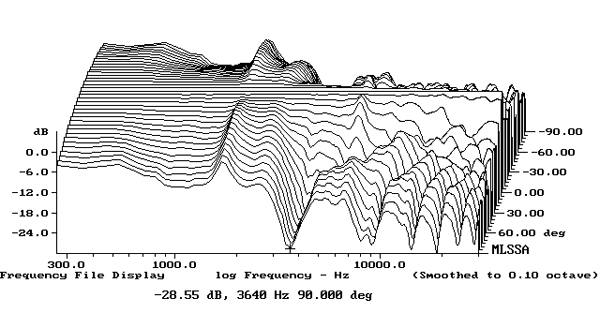

I agree that it sounds different, but not necessarily more ´real´ or ´natural´. My theory is that the different impression largely originates from the directivity as the MST is seemingly a resonance-laden, bending-wave design in the middle of its frequency range (1-4K) combined with conventional pistonic behavior below and a very large ring-radiator towards higher frequencies. It is pretty visible in Stereophile´s polar plot:
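To illustrate why a large ring radiator produces a steep rise in directivity in the treble, here is a minimal sketch of the textbook far-field pattern of an idealised thin ring of radius a, p(θ) ∝ J0(ka·sin θ), normalised to 0 dB on axis. It assumes the treble is radiated by a ring of 3" diameter (hypothetical, matching my guess above) and it completely ignores the bending-wave behavior in the 1-4 kHz range, so it is a toy model, not a model of the actual MST. J0 is computed from its integral representation so the example needs only the standard library; `RING_RADIUS_M`, `ring_off_axis_db` and `first_null_at_90_deg_hz` are illustrative names of my own.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C (assumption)
RING_RADIUS_M = 3 * 0.0254 / 2  # hypothetical 3" diameter ring -> 38.1 mm radius


def bessel_j0(x: float, steps: int = 400) -> float:
    """Bessel J0 via (1/pi) * integral over [0, pi] of cos(x*sin(t)) dt.

    The integrand is smooth and pi-periodic, so the midpoint rule converges very fast.
    """
    h = math.pi / steps
    total = sum(math.cos(x * math.sin((i + 0.5) * h)) for i in range(steps))
    return total * h / math.pi


def ring_off_axis_db(frequency_hz: float, angle_deg: float,
                     radius_m: float = RING_RADIUS_M) -> float:
    """Level relative to on-axis for an ideal thin ring: 20*log10|J0(ka*sin(theta))|."""
    ka = 2.0 * math.pi * frequency_hz / SPEED_OF_SOUND * radius_m
    p = bessel_j0(ka * math.sin(math.radians(angle_deg)))
    return 20.0 * math.log10(max(abs(p), 1e-12))  # clamp to avoid log(0) at an exact null


def first_null_at_90_deg_hz(radius_m: float = RING_RADIUS_M) -> float:
    """Frequency at which the first zero of J0 (x ~ 2.4048) reaches 90 degrees off axis."""
    first_zero = 2.404825557695773
    return first_zero * SPEED_OF_SOUND / (2.0 * math.pi * radius_m)


if __name__ == "__main__":
    for f in (1000, 2000, 4000, 8000):
        row = "  ".join(f"{a:>2} deg: {ring_off_axis_db(f, a):6.1f} dB" for a in (30, 60, 90))
        print(f"{f:>5} Hz  {row}")
    print(f"first null at 90 deg near {first_null_at_90_deg_hz():.0f} Hz")
```

With these assumptions the ring is almost omnidirectional around 1 kHz, while the first null at 90° already arrives at about 3.4 kHz, after which the off-axis output collapses quickly. That is the kind of abrupt step towards high DI I mean, although the real diaphragm is of course not a thin ring.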

I suggest an interesting experiment: listen to the Manger in a near-field setup in a heavily damped studio control room (or an anechoic chamber) and subsequently in a normal living room. It sounds like two completely different types of loudspeaker, which supports my theory that the directivity is what defines its particular sound.

It is interesting that you mention the Kii 3 as an example of utter discrepancy from the MST's sound, as that would mean debunking two essential claims made by Manger: that time coherence matters, and that the moving mass of pistonic drivers sounds unnatural. The Kii concept perfects the former via DSP to at least the level of the MST, and addresses the latter thanks to active cancellation similar to open-baffle or cardioid concepts. If Manger's claims were true, the Kii would have to sound very similar in terms of timing, impulse response and dynamics.

But it does not; you rather describe them as an antithesis of "natural" vs. "soulless" (I would not subscribe to those descriptions, but we can agree that huge differences exist). Maybe your impression also has to do with directivity, as the Kii 3 is the antithesis of the Manger par excellence: constant, medium-high directivity in the midrange and a slightly decreasing DI once the tweeter kicks in (Kii) vs. an omnidirectional/diffuse soundfield in the midrange plus a steep step towards very high DI in the treble (Manger).

I do not see any hint that this played a role in the success of the Kii. I guess people were rather easily convinced that the concept of actively suppressing reflections and room boom in a compact speaker design was what they really needed in their rooms, and I fully support this idea.