Well, it breaks the DAC-specific corrections (whatever they are), and the supposed benefits.

I’m going to try to run DSP through a Windows driver-level APO EQ. I don’t think Roon decodes MQA yet, so I will have to go through the Tidal desktop app initially. This should place the DSP on the output driver, which would be after the first level of unfolding by Tidal. Here are some links on how I plan to test:

http://www.jdm12.ch/Audio/2016_Windows-DSP.asp

The second link is for headphones but it’s the same process for loudspeakers.

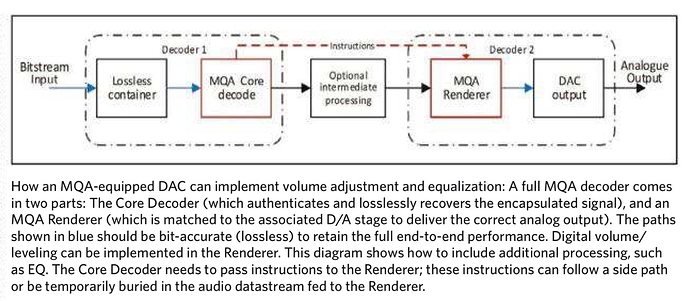

I fully expect Roon to offer the majority of its DSP functionality once its MQA support appears. I’m pretty sure the MQA software decoder library supports the DSP side-chain which Bob explicitly describes, as others have pointed out. Products such as the Bluesound streamer already use this functionality to do basic EQ and volume control without destroying the MQA render data which the DAC uses. Perhaps MQA imposes limitations on the type of DSP applied (must be minimum phase, etc.), but this seems entirely possible from a technical POV.

I also anticipate a surge in Roon subscribers once the above is released. MQA + DSP is the Holy Grail for me, and I expect for others as well.

Room correction or EQ can only be done after the first decode. Normally the first decode results in 88.2/96 kHz, which is then passed through an optional EQ. My understanding is that, provided the original sample rate and digital filter selection information survives the EQ, the signal then passes through a second stage called ‘rendering’, which takes over from the internal oversampling digital filters inside the DAC chip. The original sampling information tells the renderer how far it needs to up/oversample to match the original sample rate of the recording, while the digital filter information tells it which type of impulse filter to select.

That’s why the second stage must be coupled directly to a DAC chip; otherwise it cannot override the DAC’s internal oversampling digital filters and use its own. MQA does its own up/oversampling with its own impulse filters, and the result is channelled to the DAC for direct conversion. Note that the internal DAC oversampling digital filters are disabled in favour of MQA’s own.

Added: I think this is going to be a major challenge for Roon. Putting the first decode inside Roon is easy, but if the second stage ‘renderer’ is put inside Roon, how do you tell the external DAC to override its internal oversampling digital filters via a USB connection? MQA’s own ‘renderer’ can correctly ‘profile’ the DAC because it uses a direct connection. The exception would be an external DAC whose oversampling digital filters can be switched off manually, but not many DACs on the market can do that, apart from those that can run as a NOS DAC.

The only way to disable the DAC’s oversampling is to present it with its maximum sampling rate. However, delta-sigma DACs always oversample beyond their max input rate. MQA render details also include dither and noise shaping commands. I don’t see how a software-only solution would work unless all this was done in software as well.

MQA has pretty much said that having a software solution of the 2nd stage is possible, but it isn’t going to happen.

The reason is obvious: that eliminates the need to buy MQA HW (fees) and eliminates the control the present system gives MQA over the whole playback process.

It also makes them much more vulnerable to reverse engineering. At present reverse engineering the 2nd stage is very difficult, if not nearly impossible.

In fact the first unfold (decoding) is harder (so they charge more in fees) than the subsequent ‘rendering’, which is basically upsampling with appropriate impulse filters. Auralic’s ‘in house’ decoding and rendering is one good example.

Optional room correction can be applied after the first unfold without affecting the decoding. However, it is not that straightforward. The first decode will always yield 88.2/96 kHz at most; the subsequent rendering restores sampling frequencies higher than 88.2/96 kHz if the original recording is higher than that. Say the original recording is 352.8 kHz and encoded with a flag. It is passed through the first-stage decoding, which yields 88.2 kHz. The flag tells the renderer how much ‘upsampling’ is needed, and the renderer then applies the multiplier which restores 352.8 kHz. Of course, the flag carries not only this information but also the selection of the so-called optimised impulse filter to apply at the final stage (renderer).
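To make the arithmetic in the 352.8 kHz example concrete, here is a minimal Python sketch. It assumes, as described above, that the first unfold is capped at twice the base rate (88.2 or 96 kHz) and that the renderer only has to supply the remaining integer multiplier. The function names are hypothetical and this is not MQA's actual code; it only illustrates the rate bookkeeping.

```python
def first_unfold_rate(original_hz: int) -> int:
    """Rate after the first unfold, assumed capped at 2x the base rate."""
    # Pick the rate family: multiples of 44.1 kHz or of 48 kHz.
    base = 44_100 if original_hz % 44_100 == 0 else 48_000
    if original_hz % base != 0:
        raise ValueError(f"{original_hz} Hz is not a multiple of 44.1 or 48 kHz")
    return min(original_hz, 2 * base)


def renderer_multiplier(original_hz: int) -> int:
    """Upsampling factor the renderer stage would need to reach the original rate."""
    return original_hz // first_unfold_rate(original_hz)


for rate in (96_000, 192_000, 352_800):
    print(rate, first_unfold_rate(rate), renderer_multiplier(rate))
```

For the 352.8 kHz case this gives a first unfold at 88.2 kHz and a renderer multiplier of 4.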

In the May issue of Stereophile, I came across a new diagram, somewhat different from the previous one when it comes to room correction… see below:

Take note of the new red highlighted path called ‘instructions’ from the decoder to the renderer. As I understand it, these ‘instructions’ actually tell the renderer which upsampling and de-blurring filters (there are possibly 16 of them) to select.

These ‘instructions’ would probably not survive if they went through the ‘optional immediate processing’, call it DSP or any form of room correction. This opens up the possibility that such an implementation can also be applied to software decoding and rendering in one package.

“side path”.

Has there been any large-sample-size testing of whether audiophiles can hear any difference, i.e. an improvement, with original content higher than 24/96? I’m with Mark Waldrep on that issue. I think anything higher than that is beyond human comprehension. I think it would be prudent for large audio shows, like the Munich show, to have informal in-room ABX testing. There is always a lot of argument about this issue, but I am sure a subjective experience vote would not be far from random: 50/50.
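For anyone wanting to judge the result of such a test, a quick sketch of the chance calculation may help. Assuming each ABX trial is an independent 50/50 guess, this computes how likely it is to get at least a given number of answers right purely by luck. The function name is made up for illustration.

```python
from math import comb


def abx_chance_probability(correct: int, trials: int) -> float:
    """Probability of at least `correct` right answers in `trials` pure guesses."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    # Binomial tail with p = 0.5: count the favourable outcomes, divide by 2^trials.
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials


print(abx_chance_probability(12, 16))
```

For example, 12 correct out of 16 comes out at roughly 3.8% by guessing alone, while 8 out of 16 is what guessing would be expected to produce.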

Hi-Res is not so much about hearing more musical content as about relaxing the digital filter requirements.
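A rough illustration of the point, assuming the reconstruction filter has to pass everything up to 20 kHz and be fully attenuating by the Nyquist frequency (half the sample rate). The 20 kHz passband edge and the function name are assumptions for this sketch.

```python
def transition_band_hz(sample_rate_hz: float, passband_edge_hz: float = 20_000) -> float:
    """Width available for the filter to roll off between passband edge and Nyquist."""
    nyquist_hz = sample_rate_hz / 2
    if passband_edge_hz >= nyquist_hz:
        raise ValueError("passband edge must be below Nyquist")
    return nyquist_hz - passband_edge_hz


for rate in (44_100, 96_000, 192_000):
    print(rate, transition_band_hz(rate))
```

The filter gets about 2 kHz of room at 44.1 kHz but 28 kHz at 96 kHz, so it can be much gentler at the higher rate.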

Hi-Res or MQA, in my opinion the most important things are recording quality and a pair of good speakers. Also good room acoustics/room correction.

And when you get it all in one package, you’re in clover…

Just noting the current position regarding MQA and Room Correction in Roon as per this KB page.

Roon can decode the first unfold in software (to 88.2/96 kHz), convolve a room correction filter in the DSP Engine while preserving MQA rendering information, and then send the signal to an MQA Renderer for it to perform the second unfold in hardware.

If your convolution filter contains not only frequency domain correction but also time domain correction, then non linear phase MQA rendering is going to wreak havoc with the time domain correction. Unless you can generate the convolution filter measurements with MQA rendering in circuit, but good luck with that.

AJ
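A toy sketch of the time domain concern above, assuming both the room correction and the rendering behave as FIR filters in series. The tap values are invented purely for illustration (they are not real MQA or room correction filters); the point is only that the DAC output sees the convolution of the two, not the correction filter that was measured and designed on its own.

```python
def convolve(signal, taps):
    """Direct-form linear convolution of two sequences."""
    out = [0.0] * (len(signal) + len(taps) - 1)
    for i, s in enumerate(signal):
        for j, t in enumerate(taps):
            out[i + j] += s * t
    return out


# Hypothetical filters, made up for this example.
room_correction = [1.0, -0.5]
rendering_filter = [0.75, 0.25]

# Cascading two filters is equivalent to one filter whose impulse
# response is the convolution of the two.
combined = convolve(room_correction, rendering_filter)
print(combined)
```

Because the combined impulse response differs from the correction filter alone, any time domain correction designed without the rendering stage in circuit no longer describes what actually reaches the speakers.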

So essentially we are saying the mechanism is there for it to work but we don’t know how effective it would be given that MQA supposedly impacts phase linearity?

I up-sample everything including MQA to DSD, and let Roon do the first MQA unfold. I use it with room correction and as far as I can tell it works flawlessly. Which means you don’t even need an MQA DAC for it (I use this solution even though I have an MQA DAC).

Roon’s solution to MQA was a very good one in my opinion.

It depends how much MQA you want to do. I use Roon to software decode the first unfold (88.2/96 kHz) then send it to HQ Player which convolves room EQ, upsamples to DSD 512 and sends it to a NOS DAC. I don’t bother with the second unfolding by MQA at all, preferring HQP’s xtr-2s-mp filter and an apodising 7th order DSD modulator.
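For a sense of the rates involved in that chain, a small sketch, assuming the usual convention that DSD rates are multiples of 44.1 kHz (DSD64 = 64 x 44.1 kHz) and that the first unfold delivered 88.2 kHz. The helper name is hypothetical.

```python
def dsd_rate_hz(multiple: int, base_hz: int = 44_100) -> int:
    """Bit rate of a DSD stream given its multiple of the base rate."""
    return multiple * base_hz


core_rate_hz = 88_200               # output of the first unfold (44.1 kHz family)
dsd512_hz = dsd_rate_hz(512)        # 1-bit stream rate for DSD512
upsampling_ratio = dsd512_hz // core_rate_hz

print(dsd512_hz, upsampling_ratio)
```

So going from an 88.2 kHz first unfold to DSD512 means a 256x increase in sample rate, all of it handled by the upsampler rather than by MQA rendering.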

I generally like the sound of the MQA Masters available on Tidal using the above. But I don’t regard it as the Holy Grail and am cautious about its longer-term implications if universally adopted.

In my opinion Room EQ is far more important than any messing about with upsampling and filters. Improve the physical listening room, convolve an EQ file and fit MQA around that if you like.