What’s your explanation for this phenomenon, what’s his, what’s @jussi_laako’s, does John Siau have one, and could it simply be distortion artefacts being euphonic?

I have read several explanations, and I do not rule out the possibility you mentioned. I like to think that there is nothing wrong with people loving distortion, e.g. from a single-ended triode.

A certain proprietary Hi-Res format that people hate even adds ultrasonic stuff on purpose, and people use this to discredit the format. There must be reasons behind it, euphonic or not.

Oh, totally agreed !!!

Given who we’re dealing with there, malice would be my first hypothesis, with incompetence a close second.

Anyway, let’s try not to wake up the three-letter word trolls, but the issue there is more for people who don’t like gratuitous distortion, and thus aren’t into that particular flavor of totalitariano-sectarian approach.

Maybe there’s a space there for you guys to do that stuff in software? How about playing Carver and offering SET / three-letter-word format / whatever else enhancements to those who like 'em?

Mark Waldrep, who’s a proponent of HiRes, needs you, your ears, and your systems, for a little study on the audibility of higher than 16/44: http://www.realhd-audio.com/?p=6713 .

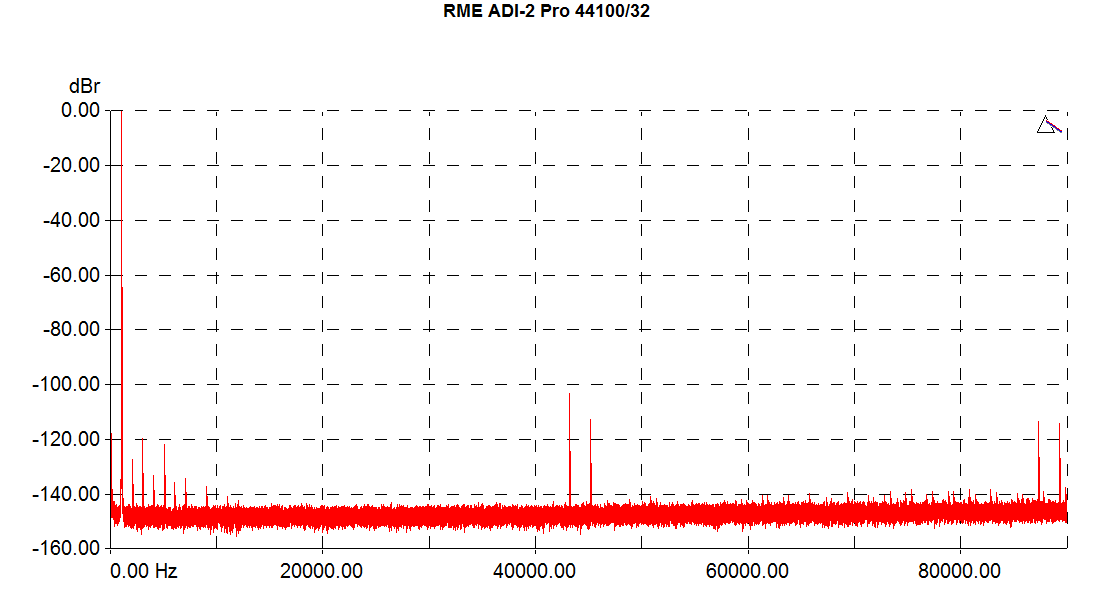

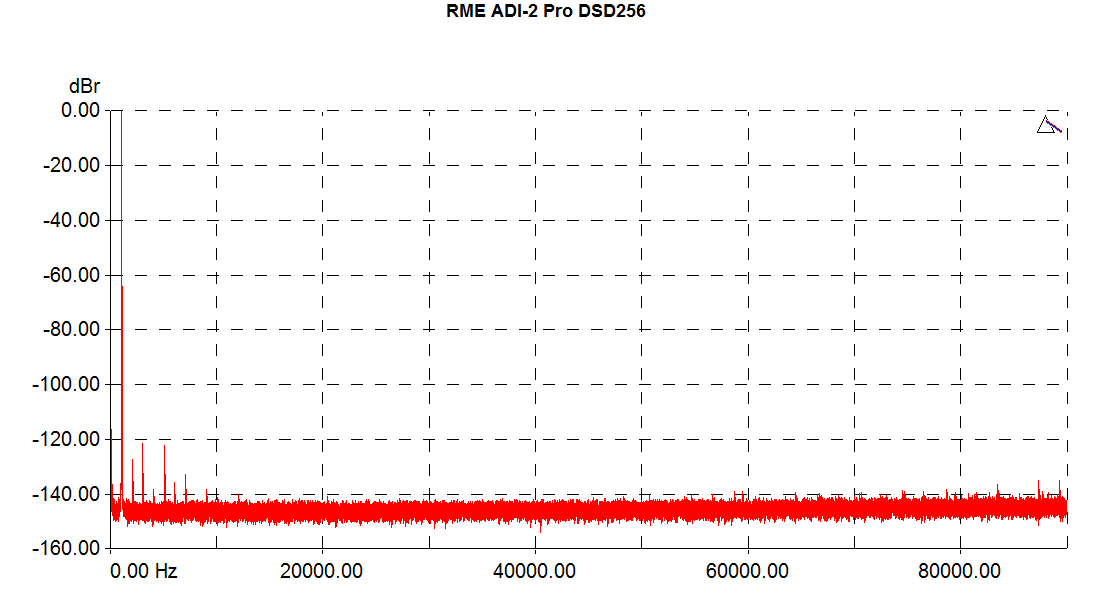

The ADI-2 Pro has its lowest distortion when run in DSD Direct mode at DSD256. Changing the DSD noise filter between 50 and 150 kHz changes how the DAC chip operates and how much ultrasonic noise is left above 100 kHz. This setting doesn’t change the distortion figures.

I can also hear a difference between the 50 and 150 kHz settings, even from RedBook sources. The difference is small, but it is there.

I don’t know where people come up with “euphonic distortion”.

It’s starting to feel a bit… almost hostile here…

For now I choose to not reply on the actual discussion, but instead to try and clarify something.

Yes @jussi_laako, your measurement shows no change in distortion figures. This is logical, and expected. (It also shows that you have a great D/A there, congrats !).

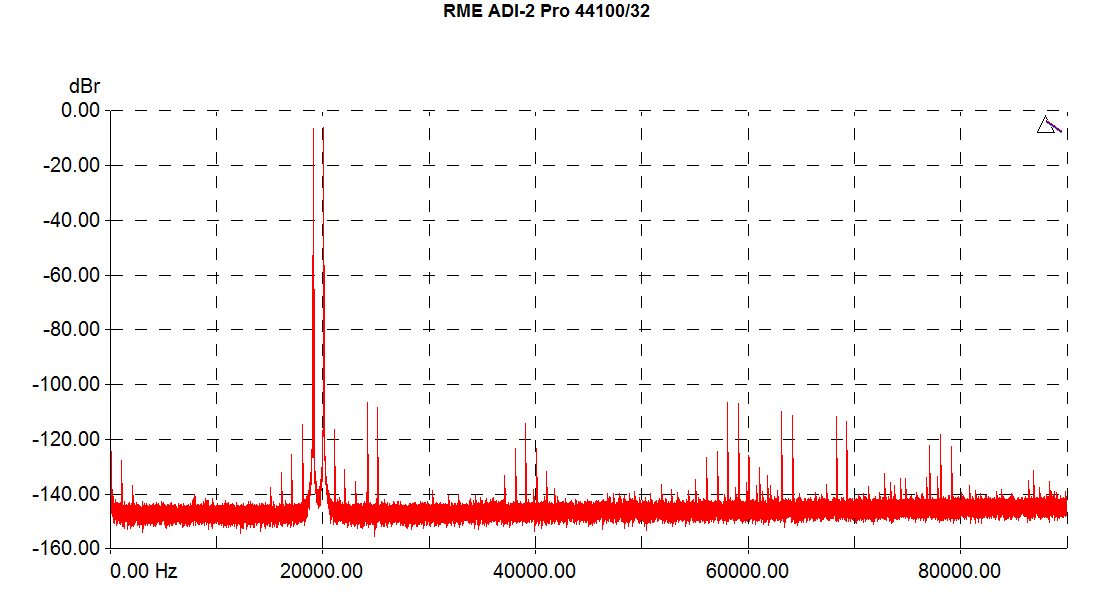

You are measuring a single tone, and therefore only measuring harmonic distortion. But people here are not talking about harmonics; it’s intermodulation they worry about.

Now go ahead, and measure TWO simultaneous tones. Preferably close to each other, closer than 20kHz. For instance 25 and 28kHz.

Most likely, you will still not observe (much) intermodulation distortion at your output, as you simply have a great D/A.

Yes, the high resolution did indeed not damage the data in the file itself, and it can be converted to analog just fine.

It’s when you feed this output to an amplifier + speaker that intermodulation is generated in that chain. Try and measure it, if you like.

Obviously, this is not your fault. The hi-res stream itself is perfectly fine, and perhaps even better than that. The trouble only arises once you convert that output into actual acoustical energy. And unfortunately, that last conversion is a necessary step for most of us.

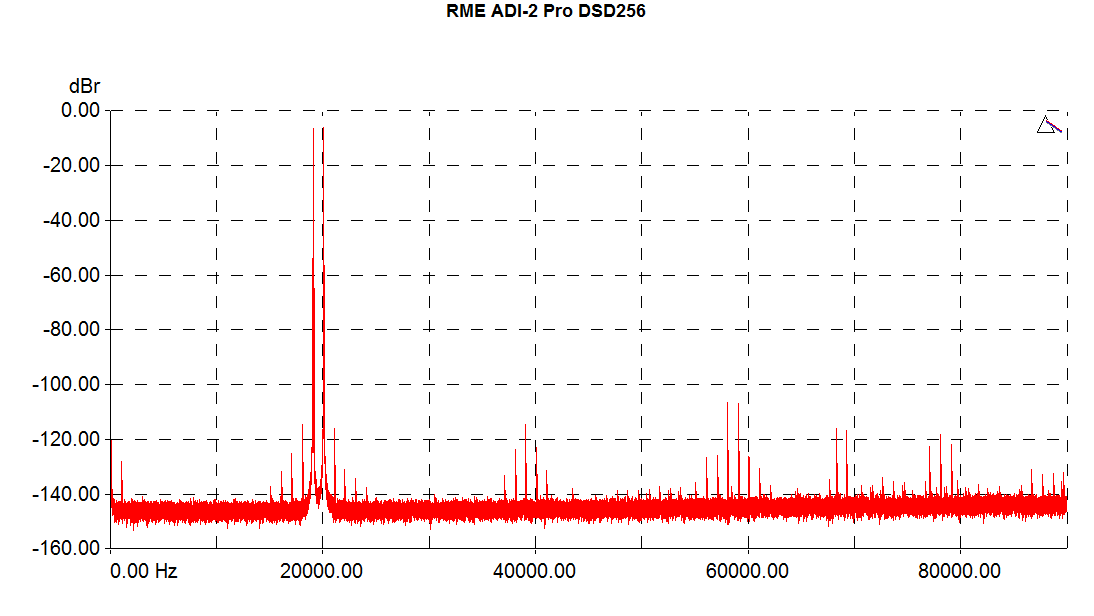

Exactly! That is specifically where this DSD256 is much better than PCM inputs!

Sure…

Yes, I do that too. No matter how you measure it, the distortion is lower with DSD256 inputs.

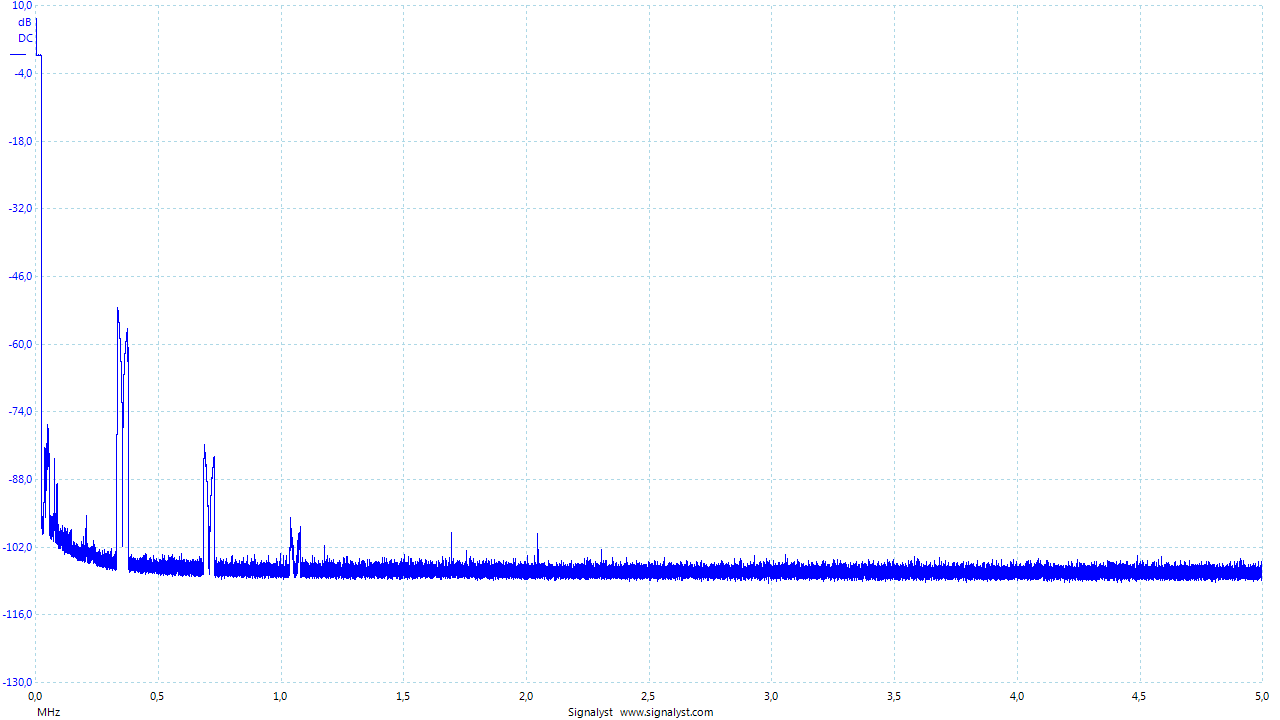

The problem with PCM inputs is related to correlated images around multiples of the digital filter output rate. Due to DSP power constraints, the digital filter in DAC chips only oversamples by a small factor, and the rest is done using S/H (copying the same sample multiple times). Because of the 8x digital filter, there are images around multiples of 352.8/384k. Not to mention the insufficient 100 dB attenuation (meaning about 17-bit reconstruction accuracy). Those images are correlated with the input and can intermodulate with each other, as you can see above from the 2 kHz tone. This can create distortion that flavors the sound.
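As a rough illustration of where those images land, here is a minimal sketch. It assumes a 44.1 kHz source and the 8x digital filter stage mentioned above; the function name and the number of multiples shown are my own choices, and the sketch says nothing about image levels, only their frequencies.

```python
def image_frequencies(tone_hz, base_rate_hz=44_100, filter_factor=8, max_multiple=3):
    """Return (lower, upper) image pairs around multiples of the filter output rate."""
    filter_rate_hz = base_rate_hz * filter_factor  # 352.8 kHz for a 44.1 kHz source
    return [
        (k * filter_rate_hz - tone_hz, k * filter_rate_hz + tone_hz)
        for k in range(1, max_multiple + 1)
    ]


# Images of a 2 kHz test tone after an 8x filter followed by sample-and-hold
for lower_hz, upper_hz in image_frequencies(2_000):
    print(f"{lower_hz / 1000:.1f} kHz and {upper_hz / 1000:.1f} kHz")
```

Passing `base_rate_hz=48_000` gives the corresponding images around multiples of 384 kHz.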

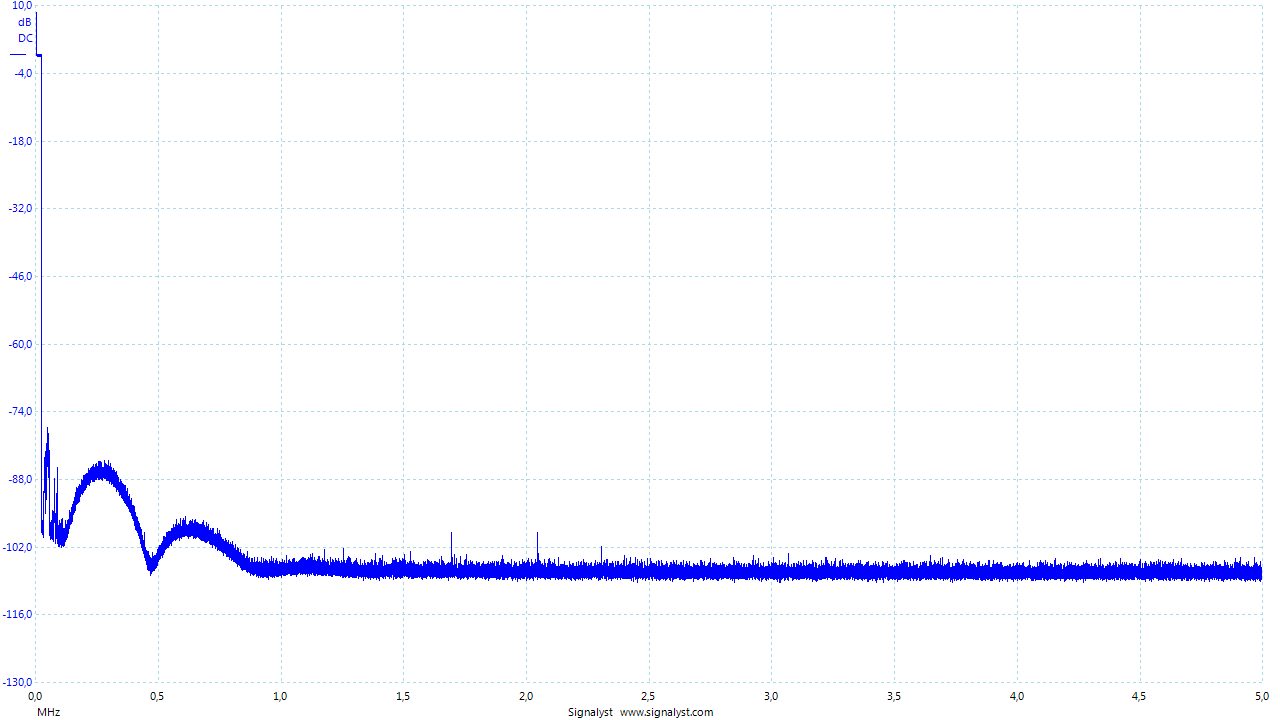

Whereas when I run 256x digital filters (with 240 dB attenuation, meaning about 40-bit reconstruction accuracy) on a computer to the 11.2/12.3 MHz DSD rate, there are no images, because the analog filter is able to remove those completely. Whatever small amount of noise is left is uncorrelated, and thus any intermodulation of it would be noise as well. So if you were to hear intermodulation products of the noise, they would sound like tape hiss. It is not distortion in any shape or form.

Above, the difference between peak image level and peak noise level is about 30 dB, which means about a 32x difference!

And if you take some newer AKM chip model, like the one used on the EVGA NU Audio card, there is no noise left at all with DSD256 inputs, so the difference to PCM is even more pronounced.
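For anyone who wants to check the dB figures quoted in these posts, a small sketch of the conversions, assuming the usual conventions of 20·log10 for amplitude ratios and roughly 6.02 dB per bit. The helper names are just illustrative.

```python
import math


def db_to_amplitude_ratio(db):
    """Convert a level difference in dB to a linear amplitude ratio."""
    return 10 ** (db / 20)


def db_to_bits(db):
    """Approximate equivalent resolution in bits (one bit is about 6.02 dB)."""
    return db / (20 * math.log10(2))


print(f"30 dB  is about {db_to_amplitude_ratio(30):.1f}x in amplitude")
print(f"100 dB is about {db_to_bits(100):.1f} bits")
print(f"240 dB is about {db_to_bits(240):.1f} bits")
```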

Yes, the Nu has been a worthwhile upgrade.

I recorded a load of my vinyl at 96/24 of albums that I haven’t got on CD. I could have recorded albums that I have copied from CD to compare the difference but to be honest I can’t be bothered, I can just play the vinyl instead. I wonder though how people can enjoy their music with so much worry about what others think about their system/download qualities/speaker placement etc etc. My music only has to impress my ears and if they are happy so am I. Advice is a great thing but at some time you just have to trust what you like in my opinion.

Now that is one excellent reply @jussi_laako !

I see that you are a lot more knowledgeable on digital processing, than I am.

I am not a good discussion partner in that field for you - so, perhaps better if I skip that.

(But in any case : your writing makes perfect sense. Good job!).

I do notice that you chose to use two signals that ‘fit’ inside the bandwidth of 44.1kHz sampled files.

In hindsight, I should have phrased my question less ambiguously. Take two sine waves, at least one of them slightly outside the bandwidth of regular 44.1kHz files, but close to each other etc. And do spectrum analysis on the acoustical output at your loudspeaker.

I am sure you’ll agree: you will then observe intermodulation in the ‘audible’ band when using a high-res file. It cannot be there when the file is sampled at a 44.1kHz rate, simply because there is nothing to modulate with. Anything that is not considered to be audio is filtered out, so it cannot do damage to the actual analog audio.

(Not trying to put words in your mouth, feel free to say so if you don’t agree. And yes, I understand that different opinions exist on the width of the audible bandwidth. The further we stretch this bandwidth, the higher the chances for additional intermodulation <20kHz.)

This is what I, or other people, mean by avoidable distortion. I wholeheartedly agree: my ears do not perceive this as a problem, at least not with regular music. But whether we like it or not, the situation is there. With artificial test signals, it’s not so hard to hear. I have trouble agreeing with an improvement in a band that is not (directly) audible, if that means I have to make sacrifices in the actual audio band.

So, in a way, that addresses @garye’s concerns, I guess.

For me personally: this wasn’t my private-me talking. I personally enjoy music, whether hi-res or even really bad recordings, and not the system itself. I would indeed hate myself if I were listening in such an analytic way for music enjoyment. I often don’t even hear differences like these myself.

Perhaps it was more ‘engineer-me’. Eager to find new paths that can improve our world. But also slightly concerned about the way that we’re doing that right now. As for ‘others’: I’m not worried about them. As long as we mean individuals. Maybe I’m a bit naive, but I believe that people can make their own balanced judgments. To do that, it helps to know all sides of the story (and that includes ‘mine’).

I hope I know something after having worked in the field since the early ’90s.

The test signals are the standard 19 + 20 kHz IMD test. When the standard was created, the test signals were defined to be within the audio band. Since most content is 44.1/16, the test signals need to fit within the Nyquist frequency of that format. With a 44.1k source you cannot place test signals outside the bandwidth of a 44.1k sampled system.

It doesn’t change the PCM vs DSD results in any way. Of course loudspeakers have higher distortion figures, but for IMD to happen you need to have something that triggers the IMD. This is pure math.

Except for the problems I explained in earlier posts, since the built-in filtering of DACs at 44.1k inputs is insufficient to filter those out properly. These problems are both higher in level and in frequency than musical content on a hires recording would be. That’s why I’m running the DAC at DSD256 instead.

Measurement test files are 44.1/32 data, either played as-is, or through good quality conversion to DSD256.

“Fixing” IMD by limiting music bandwidth and using a 44.1k sampling rate for delivery creates many more problems than it “fixes”. In addition, you shouldn’t be trying to “fix” problems of some random playback device at the source content production step. My amps, loudspeakers and headphones can go to 50+ kHz cleanly, so they can certainly cover the content 96k PCM can deliver. Except the Dynaudio speakers in the secondary set, which roll off cleanly above 20 kHz. If you are worried about distortion and ultrasonic frequencies, avoid loudspeakers with metal dome tweeters, like I do. If you want, you can buy a 96/24 file and play it to the DAC at 44.1/16. You would still have the original, should you wish to switch to playing it in some other way at a later time. But if you get a 44.1/16 file, you cannot invert the loss.

IOW, to return to my original response, DSD256 doesn’t have “euphonic distortion” people like. It is the PCM that has “non-euphonic distortion” that people don’t like (well, maybe some do).

Love your reply @jussi_laako!

An absolutely great, convincing and believable plea for DSD! Thank you, and I mean that.

However, this topic was about comparisons between hi-res PCM and lower-res PCM.

I assume that you understand that an explanation of why DSD is better can never be an answer to that. No matter how good your explanation was.

(Yes, I see that one person added DSD in HQplayer to the discussion, and I perfectly understand that you had to respond on that).

Of course you can’t :). But it is easily demonstrable. Play a high res file with a 20kHz sine. And another one with a 23kHz sine.

It will depend a bit on your ears, but you probably don’t hear both of them. (Mechanical noises of loudspeaker excluded).

Now mix them, and listen again on a variety of devices. You should be able to hear a faint 3kHz, in the middle of the audible band, in some of them.

Perhaps not in your own system: if its bandwidth is too limited, or its nonlinear behaviour is really well controlled, then it can be too hard to detect.

But you will find systems, where you can easily detect the undesired signal. And obviously, if you stretch bandwidth to 192kHz, you leave a lot more room for such events to occur.

If this (what I consider to be) inaudible information had been filtered out, like in a 44.1kHz file, it could not have done any damage to the actual ‘audible’ band.
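To put numbers on the example above, here is a minimal sketch (plain Python, function names are mine and purely illustrative) that lists the second- and third-order intermodulation frequencies of two tones and checks which of them land below an assumed 20 kHz audibility limit. It says nothing about the level of those products, which depends entirely on how nonlinear the playback chain is.

```python
def imd_products(f1_hz, f2_hz):
    """Second- and third-order intermodulation frequencies of two tones."""
    low, high = sorted((f1_hz, f2_hz))
    return {
        "f2-f1": high - low,
        "f1+f2": low + high,
        "2f1-f2": abs(2 * low - high),
        "2f2-f1": 2 * high - low,
    }


def audible_products(f1_hz, f2_hz, audible_limit_hz=20_000):
    """Keep only products below the assumed audibility limit."""
    return {
        name: freq
        for name, freq in imd_products(f1_hz, f2_hz).items()
        if 0 < freq < audible_limit_hz
    }


def representable(freq_hz, sample_rate_hz):
    """True if a tone lies below the Nyquist frequency of the given sample rate."""
    return freq_hz < sample_rate_hz / 2


print(audible_products(20_000, 23_000))
print("23 kHz in a 44.1k file:", representable(23_000, 44_100))
print("23 kHz in a 96k file:  ", representable(23_000, 96_000))
```

The same helper applied to the standard 19 + 20 kHz test pair gives a 1 kHz difference tone, which is why that pair fits inside a 44.1k file and still exercises IMD.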

Therefore, I cannot agree to the following quote if we remove DSD in this discussion. Unless you can explain which problems are created, when using 44.1kHz vs some other higher PCM sample rate.

Okay. Would you mind clarifying your definition of ‘clean’?

This is actually the field that I am confident talking about. A typical highest-quality tweeter will give a 2nd order harmonic at approx. -50dB, as a response to 10kHz at a reasonably high listening level. Higher orders are usually a bit lower in those types. And this number rises with increasing frequency.

Do you consider -50dB to be minor and unproblematic? How do you relate that to your DSD problem solving, which solves trouble <<-100dB?

By the way, a typical tweeter cannot radiate 50kHz properly, purely dictated by its large physical dimensions. They get in trouble long before. 10kHz is already _way_ troublesome. I understand your recommendation on non-metallic tweeters (I do not necessarily agree), but this is effectively the advice to apply a lowpass filter. Which I recommended anyway :).

Perhaps you’re surprised, but I really do agree with you here. I had already written something like that, above. The hi-res file itself is most likely a more accurate representation of the ‘original event’. The effort that is put into creating these, should not be stopped.

What should be stopped, in my opinion, is people’s solid belief that those files will always give a better experience. This is highly dependent on your specific playback system, and can in fact lead to worse results. Seeing that people stream this to all sorts of devices (phones, hifi systems of lesser quality, great systems that get problematic >20kHz), I don’t think it’s bad advice. In those cases, it’s a waste of resources…

I didn’t want to talk about DSD, but since someone questioned the mastering difference, my point was to explain that there are people who can hear a difference from Hi-Res without the master being different - I hope the difference is good, but if it’s technically worse in some playback chain, especially with people preferring the (possibly or unproven) “worse” presentation I’m ok with that. As long as people accept that Hi-Res sounds different, that’s enough for me to disprove “make no sense” in the title.

I’ll happily leave the issue of whether it’s audibly better or worse for users to decide.

Hi wklie. It was me. And I was questioning if people are comparing what they think they are comparing. Most of the time I highly doubt it. My guess is most go “I like this!”. But that is no good argument when someone claims “better”.

Also, the fact that some have said they hear a difference doesn’t necessarily make it so.

Scepticism aside, I really like how the thread has evolved and I hope it keeps going! It is an interesting read.

No 3 kHz here…

I mean no detectable IMD.

But let’s see your measurements demonstrating the problem in turn.

You responded to my response to that. So I of course assume you are still within the same topic…

For those in doubt, I responded with results using same software, and DAC.

My tweeters are ribbon / AMT-type which handle high frequencies pretty cleanly. But 90% of my listening is through headphones like Sennheiser HD800.

And that still doesn’t mean that the speaker would generate IMD tones, which is another thing. For example, the Dynaudio tweeter rolls off above 20 kHz, and frequencies above that just disappear into nothing, just like you would have with a low-pass filter (essentially just an inductor plus physical damping). The main problem with metal dome tweeters is the fundamental resonances they have above 20 kHz, which produce a 20+ dB peak in the response. The Dynaudio silk dome tweeter, by contrast, has its resonance frequency below the cross-over frequency.

On those systems, you can run necessary correction filters at the playback side. It shouldn’t concern the distribution media.

Resource consumption for something like 192/24 stereo is totally nothing these days. If we’d talk about something like 1.5 MHz 32-bit PCM, it could be a little more. Netflix 4K video streaming over internet is ~16 Mbps. I can get that much pretty much any time over 4G mobile data, almost anywhere. You can do quite a lot of audio for that, and that is still minuscule for current 100+ Mbps internet connections and 8+ TB HDD’s. Now we are going for 5G networks where one could do 5.1 channels of uncompressed DSD256 streaming realtime.
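For reference, the raw (uncompressed) bitrates behind that comparison can be worked out in a few lines. This is only a sketch: it ignores container and protocol overhead, treats "1.5 MHz" as exactly 1,500,000 Hz, and the function names are illustrative.

```python
def pcm_bitrate_mbps(sample_rate_hz, bits_per_sample, channels):
    """Raw PCM bitrate in Mbps, without any container or network overhead."""
    return sample_rate_hz * bits_per_sample * channels / 1e6


def dsd_bitrate_mbps(rate_multiple, channels):
    """Raw DSD bitrate in Mbps; DSD is 1 bit per sample at rate_multiple x 44.1 kHz."""
    return 44_100 * rate_multiple * channels / 1e6


print(f"192/24 stereo:        {pcm_bitrate_mbps(192_000, 24, 2):.2f} Mbps")
print(f"1.5 MHz/32 stereo:    {pcm_bitrate_mbps(1_500_000, 32, 2):.2f} Mbps")
print(f"DSD256, 5.1 channels: {dsd_bitrate_mbps(256, 6):.2f} Mbps")
```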

I spent two fairly long posts on the problems of the 44.1 kHz sampling rate. And I didn’t go into problems of the format itself, just the problems DACs have in dealing with such a format. Lo-res formats like 44.1/16 require a substantial amount of very careful DSP to produce anything useful. The higher the source resolution, the less sophisticated the DSP you need in order to decently reconstruct the original analog waveform.

Then another can of worms is the format itself. But discussing all that is already worth of two books of material, that cannot be covered here in simple forum posts.

There is no way you can generalize like that. Least of all can you generalize someone’s experience of something, even disregarding the system used.

The vast majority of people who measure Hi-End equipment and listen to it critically do not find poor ultra-HF performance, nor do they find the hi-end equipment to sound inferior. In fact, the opposite is true. This is the ONLY guy I have ever read who even suggests that. Granted, on rare occasions a unit may not perform well, but such equipment almost always gets rave reviews for its sound, and maybe once every decade or so a unit gets a bad review. A hi-end manufacturer cannot afford to put out units that don’t perform extremely well both on the bench and in the listening room. If they did, they would be out of business quickly.

There is almost zero musical content above 10kHz. But the higher the res, the better everything up to that is captured: better spatial capture, better ambiance, better attacks and decays, etc. Due to limited space, I have to settle for a near-field, but great system (a 5 figure computer system). When I play hi res files, I hear detail that is totally lost in lower res files. On hi res files I often hear sounds far off to the left, or far off to the right, well beyond the speakers, and sometimes far behind me. I never, ever get this with Red Book files, or even 44.1/24. The higher the res, the more spacious my system becomes.

Go to the 2L site, where you can listen to quite a few tracks at virtually all resolutions (including MQA), and all from the same masters. On my system, it is very clear that each step up in res benefits the music, EXCEPT for the MQA tracks. MQA never sounded even as good as Red Book. Now, that is all based on MY system, with MY ears and MY brain. Some of you will get the same results, some totally different.

Okay. Will do that asap. I wasn’t aware that you needed that, and I don’t normally prove things that I consider to be established.

Need to find my mic cable first; I have no idea where it is in my current messy living situation.

I have two systems that show this: a good but cheaper home studio, and a somewhat more expensive home theater (Anthem + Revel F206). Both give an audible response to dual ultrasonic stimuli, the home studio in a pretty extreme way. Both are fully solved when a lowpass filter is applied.

Would you mind if that measurement shows the response of a chain of devices ? In other words : if the measurement doesn’t prove where the IMD is generated in that chain (dac → amp → speaker) ? Proving that is a whole lot more work, and I believe not relevant to this discussion.

Please reply on this question, so I can know if I should save myself the trouble, or find an alternative.

Your section on speakers : I have a whole lot to comment on it, but frankly, I don’t know where to start. Let me just pick the most important one only :

That’s not describing sufficiently why tweeters have high-frequency limitations. I do not know whether you meant electrical inductance, or inductance as an electrical analogy to mechanical compliance or mass. If this were the major reason, you would observe a -6dB/oct LPF slope anywhere, or -12 when perfectly summed. And you don’t. The response typically declines much, much, much steeper. Yes, an ‘intrinsic’ lowpass filter does play a role, but it is only a minor reason for an upper frequency limit.

In case you meant electrical inductance: just observe the impedance profile of any typical tweeter. At 40kHz, you will typically find 10-20 ohms or so. In other words: there is still plenty of room to put a lot of energy into that system. You would be correct if all that energy were then converted to heat. But unfortunately, that’s not what happens.

What happens mechanically in your soft-dome example: at very high frequencies, the diaphragm can no longer act as a rigid piston. It is not rigid enough to follow the rapid movements of the voice coil. It is flexing, more and more with increasing frequency. Eventually only the sides can follow a little bit, but this is not enough radiating area to produce any meaningful level of sound.

I’ll admit: the voice coil is indeed starting to be restricted in its capacity to move. But the point is: it DOES move.

Now, an additional sine wave comes into play, and the IMD product (ref1) of both sine waves is, let’s say, 3kHz. The voice coil will move at the 3kHz rate, and so will the diaphragm. It is rigid enough to do that. And sound is then emitted. (ref1 = Let’s not forget that loudspeaker motors exhibit serious nonlinear behavior, no matter how good they are.)

(In the case of a rigid transducer, like a metallic one, something else is at play. There, the diaphragm will be rigid enough to obey the voice coil. And sound will be emitted.

The trouble is when the emitted wavelength gets short compared to the dimensions of the diaphragm. When you observe the sound (= complex sum of the sound of every single point of radiation) from a perfectly right angle, you will observe it. But once you observe at different angles, serious phase errors occur in that summation. For many angles, the sum will be close to zero. Effectively rendering it (almost) useless output.)

This is certainly not a complete explanation of that upper limit. I intended it to be an easy-ish read for people not too informed on the subject.
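The phase-cancellation argument above can be sketched numerically. This is a deliberately crude model, not a real tweeter simulation: it treats the rigid diaphragm as a straight line of equal point sources, and the 25 mm width, the 343 m/s speed of sound and the function name are all my own assumptions for illustration.

```python
import cmath
import math


def off_axis_level_db(freq_hz, angle_deg, width_m=0.025, points=101, c_m_s=343.0):
    """Level of the summed radiation relative to on-axis, for a line of point sources."""
    k = 2 * math.pi * freq_hz / c_m_s            # wavenumber
    s = math.sin(math.radians(angle_deg))
    # Source positions spread evenly across the assumed diaphragm width
    xs = [(-0.5 + i / (points - 1)) * width_m for i in range(points)]
    # Complex (phase-aware) sum of all contributions in the far field
    total = sum(cmath.exp(1j * k * x * s) for x in xs) / points
    return 20 * math.log10(max(abs(total), 1e-12))


for freq_hz in (3_000, 10_000, 40_000):
    wavelength_mm = 343.0 / freq_hz * 1000
    print(f"{freq_hz / 1000:>4.0f} kHz: wavelength {wavelength_mm:5.1f} mm, "
          f"45 degrees off-axis {off_axis_level_db(freq_hz, 45):6.1f} dB")
```

On-axis every contribution arrives in phase, so the level is 0 dB by construction; off-axis the loss grows as the wavelength shrinks relative to the assumed diaphragm width.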

Again, please reply on the question in the 2nd paragraph, so I know what to supply.

I can’t help but feel that we agree, but use a different ‘language’ to express what we mean. Bandwidth should be limited, at least for systems that experience trouble >20kHz.

We just don’t necessarily agree WHERE to apply that filter in the chain of distribution, and HOW (at which frequency, and perhaps other parameters).

I just tested once again on my three systems:

- Office headphone rig with Sennheiser HD800

- Listening room with Elac speakers having JET (AMT) tweeters

- Living room system with Dynaudio speakers (silk dome)

Tested with two IMD test signals, 149 + 150 kHz and 39 + 40 kHz, at 0 dBFS digital level. Volume set as high as I would ever listen. I hear only silence. In addition, since the Dynaudio speakers don’t go as high as the HD800 or the Elacs, I also tested those with a 24 + 25 kHz tone pair, which also resulted in silence (to my ears).

For systems that have such problems, it should be fixed in that particular system and certainly not with the source material!

If your system has such problems, you will certainly have nasty IMD problems from most DACs with 44.1k PCM inputs, because they have a fair amount of images at multiples of the 352.8 kHz built-in digital filter output rate! Since the IMD products of those are between positive and negative frequencies, it is even more unnatural than normal IMD would be. In addition, the frequencies and levels are higher than what you would encounter with musical content! This is one of the reasons for the “harsh digital sound” that many people have experienced, and which some may mistake for “detail” because the sound becomes harder.