> First, thank you for commenting on my review. Bad or good, I'd rather see a response from the company than not.

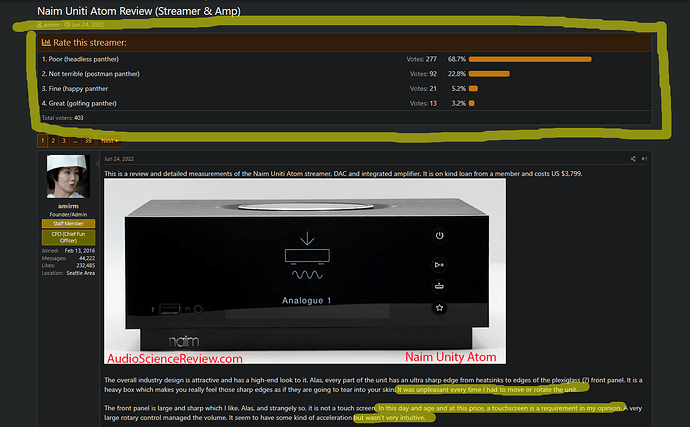

Sure. I’m talking to you while others have chosen, rightfully, to ignore you. This is not because you do measurements (that’s great) but because of how you later interpret them (or actually DON'T interpret them) and then handle them on your bizarrely constructed forum. The whole idea of you being THE authority to “approve or disapprove” a given piece of equipment, and then the voting system where you unleash the mob on a product: this is the real problem. Who in their right mind would want to take part in this?

Since you have challenged very accomplished audio designers in such a cavalier, judgmental way, it’s no wonder you did not hear back from them.

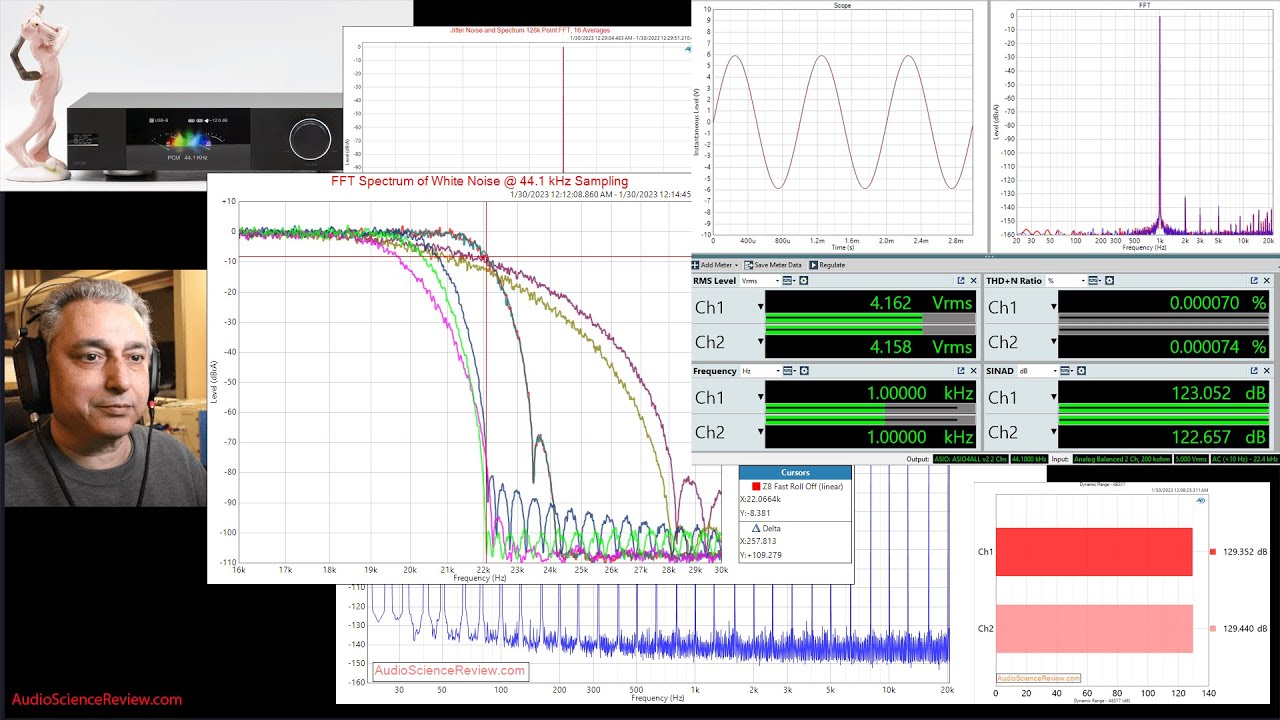

If you measured something, reached out to the designer, showed them the potential problems, and verified that these are indeed problems (like the 2nd harmonic you got wrong with Mytek), that would be both professional and courteous, and might create an opening for something valuable.

But the current style? NO. It’s too divisive. You’ll end up with a bunch of hardcore followers who cheer you on, while everybody else just ignores ASR. Most audio companies own an Audio Precision analyzer; they can measure and don’t need your AP.

As to your comment: we have been measuring the 0-to-60 times of cars for decades, but that doesn’t make them obsolete.

People buy cars after a test drive. That’s usually the first thing.

Did you listen to the BB2 on good speakers? A/B it against the Eversolo? How was the soundstage on each?

> There is a reason noise and distortion is still the top metric in audio gear: neither is wanted or desired in high-fidelity equipment.

This is a mid-20th-century approach suited to generic mass-produced gear. We now know a lot more than this, and you are still stuck in the mid-20th century…

Noise, yes, I agree: generally not desirable.

But the crux of the matter is that distortion is desirable if it is the right kind, with the right time/phase alignment. Why don’t you dig deeper into this subject?

Maybe that is why a Stradivarius sounds so good and costs $1 million: because it produces the right harmonics? Or would you rather have the “clean” sound of strings without a wooden box? Sure, that virtuoso violinist could have gotten a Chinese violin on Alibaba for $100.

You can hear this on the BB2 with the HAT™ function. When you engage HAT, which boosts the 2nd harmonic by 12 dB, you don’t hear it as unpleasant distortion but as a touch of pleasant groove. This is the whole point you don’t seem to understand, and in the eyes of many people it outright destroys your theory that the SINAD number directly correlates with “sound quality”.
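
For scale, a 12 dB boost is roughly a fourfold increase in the 2nd harmonic's amplitude. A minimal Python sketch of the standard dB conversion, assuming the 12 dB figure is a level change in the usual 20·log10 (amplitude) sense; it says nothing about the absolute level HAT starts from, which is not given here:

```python
def db_to_amplitude_ratio(db: float) -> float:
    """Convert a level change in dB to a linear amplitude (voltage) ratio."""
    return 10 ** (db / 20)

def db_to_power_ratio(db: float) -> float:
    """Convert a level change in dB to a linear power ratio."""
    return 10 ** (db / 10)

# The 12 dB 2nd-harmonic boost attributed to HAT above.
HAT_BOOST_DB = 12.0

amp = db_to_amplitude_ratio(HAT_BOOST_DB)
pwr = db_to_power_ratio(HAT_BOOST_DB)
print(f"+{HAT_BOOST_DB:.0f} dB = x{amp:.2f} in amplitude, x{pwr:.1f} in power")
# prints "+12 dB = x3.98 in amplitude, x15.8 in power"
```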

Am I right that this is what you are saying: better SINAD = better sound? If you say YES, you should lose the right to judge equipment sound quality right here, because this is a CARDINAL ERROR. The whole good-vs-bad logic at ASR is built on this single premise.
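
For reference, SINAD is conventionally the ratio, in dB, of total output power (signal + noise + distortion) to noise-plus-distortion power. A minimal sketch of that textbook definition; the component powers in the example are hypothetical, chosen only for illustration:

```python
import math

def sinad_db(signal_power: float, noise_power: float, distortion_power: float) -> float:
    """SINAD = 10*log10((S + N + D) / (N + D)), all terms as powers in the same units."""
    impairment = noise_power + distortion_power
    if impairment <= 0:
        raise ValueError("noise + distortion power must be positive")
    return 10 * math.log10((signal_power + impairment) / impairment)

# Hypothetical example: a tone of power 1.0 with noise and distortion
# each at one millionth of the signal power.
print(round(sinad_db(1.0, 1e-6, 1e-6), 2))  # prints 56.99
```

Because noise and distortion enter the formula only as a sum, two devices with very different harmonic profiles (or very different noise spectra) can end up with the same SINAD.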

So if it is a YES, I have a second question, following on from my studio tape example. Q: If SINAD is the metric, which sounds better: a 1/2" 30 IPS studio tape (SNR of 60 dB) or the $9 Apple dongle (SNR of 96 dB)? It would be the Apple dongle, right?
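
To put the two SNR figures above side by side, a minimal sketch that converts the 36 dB gap into linear ratios. It assumes both SNRs are referenced to the same full-scale level, which the comparison does not specify:

```python
def snr_gap(snr_a_db: float, snr_b_db: float) -> tuple[float, float, float]:
    """Return (gap in dB, amplitude ratio, power ratio) between two SNR figures."""
    gap = snr_b_db - snr_a_db
    return gap, 10 ** (gap / 20), 10 ** (gap / 10)

# Figures quoted above: 1/2" 30 IPS tape at 60 dB SNR vs. the $9 dongle at 96 dB.
gap_db, amp_ratio, power_ratio = snr_gap(60.0, 96.0)
print(f"{gap_db:.0f} dB gap: tape noise is about {amp_ratio:.0f}x the dongle's "
      f"in amplitude, {power_ratio:.0f}x in power")
```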

So, since these tapes are the source of 90% of the sound on hi-res Qobuz (not the digital sine waves from your AP), how do you now account for those 60 dB, the tape distortion, and the playback of all this? What matters and what doesn’t? Please elaborate…

> You speak of brain and psychoacoustics. The latter is part of my professional career and in many reviews, I apply that to what is measured to determine level of audibility. You can read more about me here: A bit about your host… | Audio Science Review (ASR) Forum

The level of audibility depends on the character of the background noise; in other words, it’s the background sounds that determine how well you can hear the main sound, and the sound itself matters too. Makes sense, right? This is discussed somewhere on ASR, where figures of -120 dB seemed to be posted arbitrarily. This is not a scientific approach. The threshold of audibility is not constant.

> May I ask what your professional qualifications are in this regard?

I thought I mentioned this in my first post: I studied electronics and acoustics, worked for many years in the largest New York City recording studios, and ran Mytek for 30 years, where we are known for the best professional converters and, later, excellent award-winning hi-fi. I designed my first 18-bit mastering ADC in NYC in 1991. My career follows the complete arc of digital audio history: from early Sony 1630 recorders at the Hit Factory in 1989, through the first 20-bit and the 9624 converters of the '90s, DSD from 2000 onward (Mytek made a master DSD recorder for Sony), and MQA in 2015, to today’s hi-res digital (768 kHz/32-bit and DSD512).

There were very similar discussions about DSD in the early 2000s: how badly DSD measures, how it has all that noise, etc. The same SINAD crap as here. Yet 20 years later, DSD is still considered the best-sounding digital format (by the way, because it does not use digital filters).

> Reading your posts, you seem to be talking about folklore like some distortion at these very low levels is desired. There is not one piece of research or controlled testing that backs this.

There are plenty of AES papers on the subject. And the “folklore” is called experience.

Me? A lot of records made in studios, a lot of music heard, 100+ pieces of equipment designed. Humans use their ears, not an Audio Precision, to hear and perceive sound. But I do follow the routine of design/listen/measure/correct/listen/measure. Most designers do this, but not all.

If you still have the BB2, plug it in and switch HAT on and off while playing music. Tell me HOW you hear/perceive that extra 12 dB of 2nd harmonic.

> Sure, back in tube days with very high distortions, some people opined that this may be the case but even that was never verified in controlled testing.

You see, Amir… You make absolute statements like this that just show there is a whole audio world you are not part of, or maybe willfully ignore.

It’s not like we were all lost in a desert and then you showed up to enlighten us with this incredible SINAD. If you try to be “scientific” yet ignore counterclaims and don’t investigate them, you’ll end up being ignored while the rest of us move on.

> Ultimately the story you tell is the same story we hear from every expensive audio gear manufacturer that measurements show to be poor performing. Why should anyone believe your story instead of theirs? How can you prove your claims? Are you asking that we just accept those claims because you said it???

Yes, you hear the same thing from all of us because of your simplistic approach, compounded by the real arrogance of the way your forum presents it. Your forum’s architecture and policies are the real problem. News of my post here has already made it to the trolls on your forum. Who’d want to be a part of this?

> You see, that is the beauty of measurements. You can touch and feel them. You can verify them. They are reliable facts. Not assumptions, “everybody knows,” self-serving opinions, etc.

There is a whole studio-engineering/audiophile vocabulary: soundstage, tight bass, smooth top, clinical sound, lifeless sound, groovy, musical, etc.

You may dismiss these as subjective, but unfortunately for you, this is how records are made! Recording studio staff don’t talk SINAD. They talk music.

Now, the real challenge is to correlate the two: for example, does a second harmonic at -95 dB make a DAC groovy? Yes! Is this good? 9 out of 10 people would agree. Is a linear power supply responsible for tighter bass? Yes! Most audiophiles would agree.
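
For readers used to percentage distortion figures, a minimal sketch converting harmonic levels in dB to percent of the fundamental. It assumes the -95 dB figure above, and the -120 dB figure mentioned earlier, are relative to the fundamental (dBc); neither reference level is stated explicitly:

```python
def dbc_to_percent(level_dbc: float) -> float:
    """Convert a level relative to the fundamental (dBc) to percent of its amplitude."""
    return 100 * 10 ** (level_dbc / 20)

# -95 dB: the second-harmonic level discussed above.
# -120 dB: the audibility figure referred to earlier as posted on ASR.
for level in (-95.0, -120.0):
    print(f"{level:.0f} dBc -> {dbc_to_percent(level):.6f} %")
# prints "-95 dBc -> 0.001778 %" then "-120 dBc -> 0.000100 %"
```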

This is not some mystical, “subjective”, “unscientific” jargon but the clear everyday vocabulary of recording professionals and audiophiles, and it describes the main sound character of a given piece of equipment. We use it every day and understand its meaning perfectly and precisely.

We use one microphone for vocals and another for piano. Why? Because they sound different. Recording is art aided by science, not the other way around.

Your measurements do not describe it. They are a tool that’s useful in design, but just a simple tool. Using them exclusively to judge sound quality is like peeping into Carnegie Hall through a keyhole.

But for some people it may take years to understand the complexity of sound.

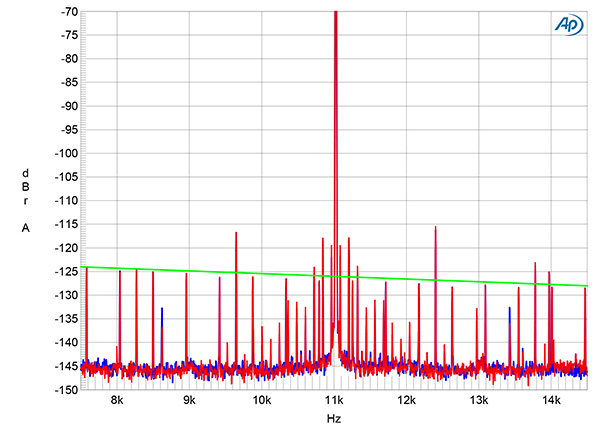

> But let’s say you are right. What possible value comes out of all the noise and interference shown in tests that didn’t exist 70 years ago but are clearly visible in your product?

It may be visible, but is it audible? And how?

If you now took the next step of correlating the measurements with what you heard, and said, for example, “I believe the presence of this noise here results in me hearing this, or hearing less of that,” I would have a whole new level of interest in your work.

But as long as you routinely continue doing what you do now, it’s simply boring, divisive and, I’m afraid, harmful to newcomers to hi-fi.

I could now say that maybe it is ASR that misleads people into buying the wrong equipment, when they might otherwise have really enjoyed a nice tube amp or a turntable.

After all, we listen to music for feelings of pleasure, which are “subjective”.

It’s like food: some people like fat and some people like lean.

Sincerely, Michal at Mytek