Question… and I think the answer is going to be “try it and report back”, but I figured I’d see if anyone had any ideas.

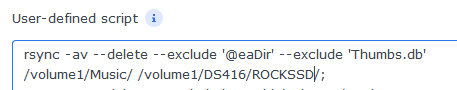

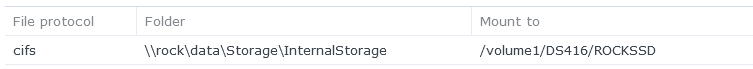

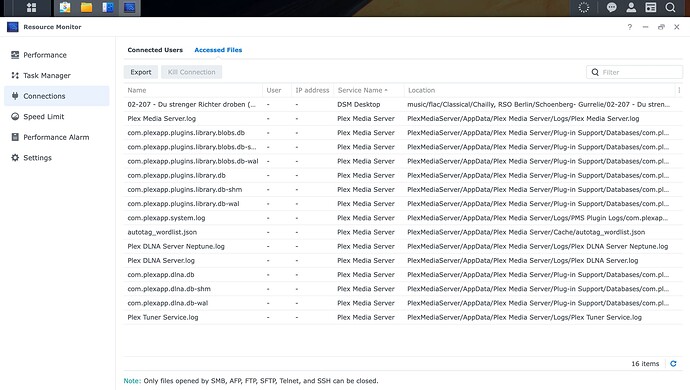

Currently, my way of getting files from the music master on my NAS to the USB-attached SSDs on my two cores is the Task Scheduler rsync jobs shown above. One is local, and I have it run hourly. The other goes across a VPN tunnel to another house. Both are set up via remote CIFS mounts on the local Synology, so the remote house's job looks like:

```shell
rsync -av --delete --exclude '@eaDir' --exclude '#snapshot' --exclude '#recycle' --exclude '.DS_Store' /volume1/music/ /volume1/homes/admin/RemoteRock/1_44_1-42218_SSK_DD5641988763D_c57f2da1-6301-49bc-6299-3841eef904e3-p1/music
```

(Again, RemoteRock is a CIFS mount of a NUC in another state, which has a local IP address reachable through an OpenVPN tunnel.)

They're on cable plans from different companies, and both have guaranteed upload speeds of around 20Mbps. I currently get more like 7Mbps (measured at the UniFi console), and it's much less in reality: it took 9 days for my 600Gb music share to traverse the connection. (I was stupid and didn't start the copy locally, but once it was going I was lazy and decided to see how it did.) I'm sure there's a bunch of overhead, and I'm sure there's a bottleneck here or there. Mostly it's fine. It works, which is amazing. I'm never adding more than 3-4 albums a day, and even that is infrequent. So I should probably leave well enough alone.
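For what it's worth, here's the back-of-envelope arithmetic on that 9-day transfer as a tiny shell/awk sketch. It assumes decimal units and that the copy ran continuously for the full 9 days, and since "600Gb" could be read as gigabytes or gigabits, it computes both.

```shell
#!/bin/sh
# Average throughput in Mbps for a transfer of a given size over a number of days.
# Assumptions: decimal units (1 GB = 1e9 bytes), copy running nonstop the whole time.
avg_mbps() {
  size=$1   # amount transferred
  unit=$2   # "GB" = gigabytes, anything else = gigabits
  days=$3   # elapsed wall-clock days
  awk -v s="$size" -v u="$unit" -v d="$days" 'BEGIN {
    bits = s * 1e9 * (u == "GB" ? 8 : 1)    # convert size to bits
    printf "%.2f\n", bits / (d * 86400) / 1e6   # bits per second -> Mbps
  }'
}

avg_mbps 600 GB 9
avg_mbps 600 Gb 9
```

Read as 600 gigabytes, that works out to roughly 6.2Mbps on average, in the same ballpark as the console figure; read as 600 gigabits, it's under 1Mbps.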

But… I also have a crappy little Synology DS220j at my remote house that I use as a Hyper Backup Vault for important stuff on my primary home NAS. So I have backup rotation, etc., in case my house burns down or a guest goes into my primary home server closet and drop-kicks my DS918+.

What I'm wondering is whether I should mount the remote ROCK via SMB on the remote Synology and then use syntax more like `rsync -av /volume1/music/ rsyncuser@192.168.10.232::/volume1/mounts/secondhomerock/1_44_1-42218_SSK_DD5641988763D_c57f2da1-6301-49bc-6299-3841eef904e3-p1/music` instead, relying on the fact that the second home's Synology can act as an rsync daemon.

In other words, is there an advantage in speed or reliability to making the CIFS mount a local one and having rsync traverse the tunnel, or should I keep it as it is, where the CIFS mount traverses the tunnel and rsync does all its work within the local Synology? (Sorry if I'm not explaining this with exactly the right words; I hope it's clear.)
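A rough sketch of what the daemon-based version might look like, in the same shell style as the current job. Two details from the rsync man page seem relevant. First, in daemon mode the destination is written `USER@HOST::MODULE/PATH`, where MODULE is a name the remote rsync service exports, so a form like `::/volume1/...` with an absolute path probably isn't what's wanted. Second, when source and destination are both local paths (as with the current CIFS-mounted destination), rsync defaults to `--whole-file`; with a daemon on the far end, the remote side checks its own files' sizes and timestamps, so comparing the library may no longer cost an SMB round trip across the tunnel for every file. The `secondhomerock` module name and the password-file path are hypothetical, and it's an assumption that DSM's rsync service can export the SMB mount of the ROCK as a module.

```shell
#!/bin/sh
# Sketch of a daemon-mode variant of the remote-house job.
# Hypothetical: the "secondhomerock" module (it would need to be exported by the
# DS220j's rsync service and point at its SMB mount of the remote ROCK) and the
# password-file path. Source path and excludes match the current job.

ROCK_DIR=1_44_1-42218_SSK_DD5641988763D_c57f2da1-6301-49bc-6299-3841eef904e3-p1

# Build a daemon-mode destination: USER@HOST::MODULE/SUBPATH.
# MODULE is a name the remote rsync daemon exports, not an absolute filesystem path.
daemon_dest() {
  printf '%s@%s::%s/%s\n' "$1" "$2" "$3" "$4"
}

# Run the sync; extra rsync options pass through, e.g. "-n" for a dry run.
sync_music() {
  dest=$(daemon_dest rsyncuser 192.168.10.232 secondhomerock "$ROCK_DIR/music")
  # If the module requires authentication, rsync prompts for a password unless
  # --password-file or RSYNC_PASSWORD is supplied; a scheduled task can't answer
  # a prompt. The password-file path here is hypothetical.
  rsync -av --delete "$@" \
    --exclude '@eaDir' --exclude '#snapshot' --exclude '#recycle' --exclude '.DS_Store' \
    --password-file=/volume1/homes/admin/.rsync_pass \
    /volume1/music/ "$dest"
}
```

Calling `sync_music -n` would list what would change without copying anything, and `sync_music` alone would do the real run. The rsync daemon protocol itself doesn't encrypt the data it transfers, so this variant would still lean on the VPN for privacy.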

Also, note that I'm not encrypting anything over rsync because I'm relying on my site-to-site VPN for security. I rotate that password periodically and try to assume that it can't be a worse vulnerability than a frontal attack on either home's UniFi IPS detection, firewall, ARC port, etc.

Thanks for any thoughts. Never thought I’d be trying to figure stuff like this out for myself.