Is this the guy who compiled the same firmware code several times with no changes, and got different SQ each time?

Maybe I’m a bit of a rebel, but when my mother told me not to touch the hot plate when I was young because it was, well, hot, I had to see for myself. She was right.

This is what €100 worth of data and power cabling looks like:

I just took delivery. Looking forward to A/B-ing the lot.

Sounded a bit odd to me, unsettling like I couldn’t quite work out where everyone was. So I swapped phase. Zelmani’s vocals immediately locked center-stage on my system. The separation of instruments is also much more obvious. The ghost vocals, also, at 1.10 are much less ‘subtle’. They are straight out of the left speaker and completely separated from Zelmani. I would have thought it’s a recording mistake. Probably Zelmani’s guide vocals bleeding through the mike on the left channel when they did the piano. I tried a few other tracks on that album as well. And I would have the same comments. Not entirely convinced swapping phase for that album is the best idea though because I’m not sure I like the much more in your face ‘hi-fi’ sound that you get. You have to decide. I was unfamiliar with this Swedish artist though and do like the album so I thank the OP for that.

I see you went for the real audiophile noise-rejecting jitter-correcting audio-prioritizing anti-phase-shifting triple-twisted cables there @RBM

It’s at 1:11, where Sophie sings almost like an echo, repeating what she said in the center voice. So ‘chorus’ is a little misleading.

The ghost voice is very exactly positioned (7 dm to the right of the right speaker) in my system and room, so I don’t think it’s a recording mistake. And yes, she’s a very nice artist; her first 6 or so records are very good, the following ones are not bad but not as good.

Note that you probably need good room acoustics to hear this effect. But for me it’s a nice little detail since it reacts to very small changes in the system.

Swap the phase and see what you think. It’s easy to do. Swap the speaker cables or pre-amp interconnects.

I can do it on the DAC (Width set to -1), but there is no difference except the obvious ones. Not to nitpick, but that is a channel swap; a phase swap (like changing absolute phase) is something else.
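To make the distinction concrete, here is a minimal sketch, assuming stereo audio is represented as a list of (left, right) sample pairs. The function names are hypothetical and not taken from any DAC or player software. A channel swap exchanges left and right; an absolute phase (polarity) inversion flips the sign of every sample and leaves left and right where they are.

```python
# Hypothetical helpers for illustration only; frames are (left, right) pairs.

def swap_channels(frames):
    """Channel swap: left becomes right and right becomes left."""
    return [(right, left) for left, right in frames]


def invert_polarity(frames):
    """Absolute phase inversion: every sample changes sign, channels stay put."""
    return [(-left, -right) for left, right in frames]


frames = [(0.5, -0.25), (0.1, 0.3)]
print(swap_channels(frames))    # channels exchanged, signs unchanged
print(invert_polarity(frames))  # signs flipped, channels unchanged
```

The two operations are independent of each other, so one cannot stand in for the other.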

Understood. Still sounds like a recording mistake to me. Maybe there are some recording engineers following this thread.

There is a ‘live’, much lower-fi YouTube version I much prefer. It doesn’t have the ghost vocals and sounds much more natural to me.

https://www.youtube.com/watch?v=OMhgdZiuGbg&list=RDOMhgdZiuGbg&start_radio=1&t=0

The ghost vocals effect is also very obvious on this lo-fi YouTube studio version (a bit later, at 1.18). But to my ears anyway the whole album is oddly recorded, and this particular ghost vocals effect is just exaggerated on my main system. I certainly wasn’t motivated to rip out my ethernet network to see if I could hear the effect more clearly. I don’t know why I find the album oddly recorded. I think it is probably because it sounds like all the artists are in their individual booths, but I am probably imagining it; I am no expert. We have very good acoustics at home. The business end of the system is B&W 802 Diamonds and an ancient Mark Levinson 27.5 that I haven’t been able to part with, although various other amplifiers periodically pass through the system.

Probably the best sounding lamps in the world . . .

The conversation has just become moot.

From the website -

"IRIS dramatically improves sound quality while simultaneously activating the brain. This is Active Listening."

‘Fit for purpose’ is the correct terminology, I believe…

Great minds . . .

@Janne_Johansson I don’t disagree with you. I did say earlier that, while my world was round, there are a few flat spots. This may well be one, but I have my reasons.

Many years ago I worked for one of the pioneering US companies in packet traffic shaping. Our product could take any LAN traffic stream that was part of a multi-packet traffic flow (file transfer, voice call etc.) and a) guarantee the bandwidth, b) shape the traffic to produce a near constant bit rate when viewed from a packet/time perspective, and c) drive up network efficiency because of these optimizations. It was really quite amazing. At a customer install I was asked to troubleshoot why VoIP calls sent over a low bandwidth WAN (128 Kbps) would occasionally suffer about a second of distortion at random times. At first pass, this couldn’t happen, as we always scheduled the VoIP packets to be forwarded immediately on receipt and in advance of any other queued packets from non-real-time traffic. However, it was happening, and we tracked the issue down to the case where a VoIP packet arrived just as the router started to send a low-priority 1500 byte packet, from a different queue, over the same low speed WAN link. The VoIP packet would be queued behind the low-priority packet as we didn’t cut packets off mid-transmission, so everything was working as designed. But, because the bandwidth of the WAN link was so low, the 1500 byte packet took long enough to send that it caused the VoIP player/codec to underrun, and that created the audio artifacts. The fix? Fragment large packets to ensure the availability of bandwidth when needed for the real-time traffic. Basically we turned an IP router into an ATM-like switch on the low speed links. What was interesting was that all external measurements looked the same, but that scheduling nuance was a real audible problem. As you probably know, fragmenting packets is undesirable as it puts work on the receiving node to reassemble them, which parallels the point you make about RT kernels negatively impacting other things. You are right, it’s a trade-off against priorities.
And this example is obviously real-time audio over a network, so this specific issue wouldn’t/can’t exist in streaming, so why is it relevant? At the point the audio signal is being passed to the DAC chipset, or via a synchronous connection, scheduling is critical, irrespective of whether it’s streaming or not. Also, the OS and the makeup of an SOC or CPU/motherboard act like networks, and as it’s desirable for DAC chips to have small buffers, DACs are susceptible to timing issues exposed in those “PC networks”, so at an OS/bus level, the example has some relevance. This is my round earth experience!
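For a sense of scale, here is a rough back-of-the-envelope sketch of the blocking delay in the WAN example above. It assumes 128 Kbps means 128,000 bits per second and ignores link-layer framing overhead; the 10 ms delay budget and the function names are hypothetical, chosen only for illustration.

```python
def serialization_delay_ms(packet_bytes: int, link_bps: int) -> float:
    """Time to clock one packet onto the wire, in milliseconds."""
    return packet_bytes * 8 / link_bps * 1000


def max_fragment_bytes(link_bps: int, max_delay_ms: float) -> int:
    """Largest fragment whose serialization delay stays within max_delay_ms."""
    return int(link_bps * max_delay_ms / 1000 // 8)


LINK_BPS = 128_000  # assumption: 128 Kbps taken as 128,000 bits per second

# How long a VoIP packet can be stuck behind one full-size packet.
print(serialization_delay_ms(1500, LINK_BPS))

# Fragment size that would cap that wait at a hypothetical 10 ms budget.
print(max_fragment_bytes(LINK_BPS, 10))
```

Under these assumptions a single 1500 byte packet holds the link for roughly 94 ms, which is several voice packet intervals at typical codec settings, while capping fragments at around 160 bytes would keep the worst-case wait near 10 ms.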

And this is my flat earth: I have also found that the less “work” I require audio systems to do, the “easier” or more relaxed the sound. Entirely subjective. I’ve rationalized this as: the less processing, the less correcting, i.e. the less strain, the more natural everything feels. In other words, the more I have each component operate in its sweet spot, the better it sounds. I can’t measure it, but I believe I can hear it. It’s like the micro version of speakers that are too small or too big for a room. Somehow it doesn’t sound right; they’re operating outside of their sweet spot to operate within the sweet spot of the room and listener, or vice versa. Either way, something has to do too much work.

So yes, pragmatically, for probably 99% of situations, your guidance is much better than mine. Virtually no one needs to waste time tweaking modern OSes on modern, well designed hardware to get great sound, and I think this was @Bill_Janssen’s point as well: assuming the streamers are fit for purpose, well engineered and part of a similarly well engineered system, all streamers should sound the same. So I predominantly agree with you both. But, in those esoteric situations, when every other optimization is there, my “working in the sweet spot” tenet makes me believe that there may be some small fraction of a percent of benefit that can be realized by removing that “coincidental” misalignment, and that that just helps make everything work that little bit closer to its sweet spot, with the equivalent fraction of a percentage improvement in the sound’s ease, and thus perceived quality. It’s like a top athlete tuning every aspect of their life for performance. The British cycling team used to take their pillows with them to hotels, because that fraction of improvement in sleep had a discernible positive impact when they were at their peak, but probably not for the rest of us. And so, it is a “may be”, not an “always”, even in my roundish/flattish earth mind.

Enjoying the discussion, thank you.

I definitely agree with this, but I have another explanation: all electronic “work” generates electronic noise, so less work leads to less electronic noise. This is true for CPUs as well as for network-related things like error correction for packets (re-sends). And one of the biggest culprits is switching power regulators, which tend to produce a lot of electronic noise (at least the cheap consumer grade stuff).

In my opinion, the number one thing you notice when you decrease electronic noise is precisely what you say: an easier, more fluid and relaxing sound (less digital sounding).

This is something quantifiable.

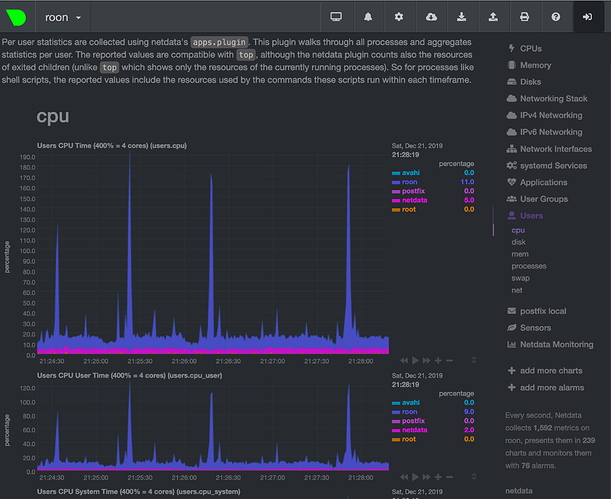

Let’s look at my Roon Core’s CPU usage.

See those nasty-looking spikes? They occur at the start of every track (including during gapless playback) and last a few seconds (shorter if playing a local file, longer if playing a track from Qobuz).

As you can easily verify, the same spikes in CPU usage occur at the start of every track on your Roon Core as well.

Have you ever noticed the difference (less “fluid and relaxing sound”) at the start of a track?

No, I haven’t, but my computer and HiFi are totally isolated from each other (fiber, different fuses, etc). I can play a CPU and GPU intensive game on my computer, with Roon and HQPlayer playing music, without noticing any decrease in sound quality.

But I think this is the main reason why Roon suggests separating core and endpoint, and it’s also the reason why USB directly from a general purpose computer to a DAC usually doesn’t sound too great.

Nope, I bought Roon so that I could explore my music library in meaningful ways that were previously nowhere near as rich. The jury is still out on whether Roon has lived up to its promise, though at present the nays have it.