So I saw this got reposted over on head-fi (where I do not have an account), so I figured I’d give a bit more background here. Sorry for the wall of text…

Wi-Fi transmission rates have three main factors that determine their maximum theoretical bandwidth: channel width, symbol encoding scheme, and stream count.

On 2.4GHz, channel width is effectively fixed at 20MHz. (Using 40MHz on 2.4GHz is considered very bad neighborly behavior – the only practical real-world usage is for point-to-point wireless links using highly directional antennas such as a Yagi.) On 5GHz, wider channels are supported. 802.11n supports 40MHz channels. 802.11ac and 802.11ax support up to 160MHz channels, but that’s not really practical in the real world, except for the aforementioned point-to-point links. The realistic maximum is 80MHz channels, which can work OK in the home, where you typically have low-density deployments. (But keep in mind density is not determined by just your kit, but by all your surrounding neighbors’ kit as well.) For enterprise, channel width is usually restricted to 40MHz, and even restricting everything to 20MHz is quite common for mid- to very dense deployments (e.g., stadiums).

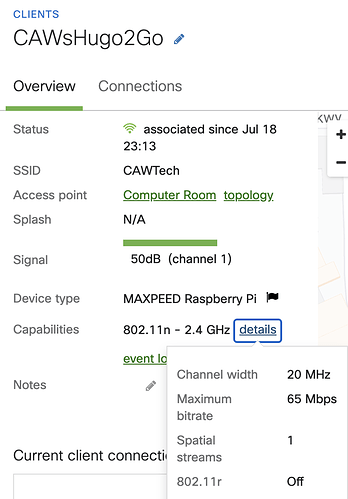

The symbol encoding scheme is a variable sliding scale, referred to as the “MCS index”. The client and the AP each exchange frames indicating their max supported index. (The index actually encodes both the symbol encoding scheme and the stream count, but don’t worry about that.) They then attempt to run the connection at the maximum rate both support. For 802.11n, that would be 64-QAM encoding (coding rate 5/6) with a 400ns guard interval, which gives a max possible rate of 72.2Mb/s per stream on a 20MHz channel. The 2Go’s advertised 65Mb/s indicates it does not reach that top rate: 65Mb/s is what you get from either the top single-stream MCS index with the longer 800ns guard interval, or from one index down with the 400ns guard interval.

Now, I keep mentioning “theoretical maximum”, because in the real world, you will almost never communicate at the maximum signal rate. The client and AP are constantly re-evaluating the symbol encoding selection to maximize bandwidth as RF conditions change. And change they do – constantly. There is so much interference from other devices, wall penetration reducing signal strength, multipath reflections, etc., that your average real-world rates are often only half the theoretical max, and can frequently be MUCH WORSE. It’s not uncommon to see single-stream 2.4GHz top out at around 20-25Mb/s. And that’s BEFORE you factor in that Wi-Fi is a shared, half-duplex medium and other devices on your network will steal time slots, reducing those rates further. That’s why 384k PCM, which is ~25Mb/s continuous, is going to be extremely unreliable on a single-stream 2.4GHz channel. And the ~50Mb/s of 768k PCM (or DSD512) is a complete and utter fantasy. (While not directly relevant here, since the 2Go is an 802.11n device, 802.11ac and 802.11ax introduce denser encoding schemes, 256-QAM and 1024-QAM respectively, that pack more bits into each symbol within the same channel width.)
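For anyone who wants to see where the 72Mb/s and 65Mb/s figures come from, here is a rough sketch of the single-stream 802.11n rate arithmetic. The table and the `phy_rate_mbps` helper are my own illustration, not anything from Chord or Meraki; the inputs are the standard 802.11n numbers for a 20MHz channel (52 data subcarriers, a 3.2µs symbol plus the guard interval). Note that 65Mb/s falls out of the formula in two different ways.

```python
from fractions import Fraction

# 802.11n single-stream parameters for a 20MHz channel.
DATA_SUBCARRIERS_20MHZ = 52
USEFUL_SYMBOL_NS = 3200  # OFDM symbol duration, excluding the guard interval

# MCS index -> (modulation, coded bits per subcarrier, coding rate)
HT_MCS = {
    0: ("BPSK", 1, Fraction(1, 2)),
    1: ("QPSK", 2, Fraction(1, 2)),
    2: ("QPSK", 2, Fraction(3, 4)),
    3: ("16-QAM", 4, Fraction(1, 2)),
    4: ("16-QAM", 4, Fraction(3, 4)),
    5: ("64-QAM", 6, Fraction(2, 3)),
    6: ("64-QAM", 6, Fraction(3, 4)),
    7: ("64-QAM", 6, Fraction(5, 6)),
}


def phy_rate_mbps(mcs, guard_ns, data_subcarriers=DATA_SUBCARRIERS_20MHZ):
    """Theoretical single-stream signalling rate in Mb/s (hypothetical helper)."""
    _, bits, coding = HT_MCS[mcs]
    data_bits_per_symbol = data_subcarriers * bits * coding
    symbol_ns = USEFUL_SYMBOL_NS + guard_ns
    # bits per nanosecond * 1000 = megabits per second
    return float(data_bits_per_symbol * 1000 / symbol_ns)


if __name__ == "__main__":
    for mcs, (modulation, _, coding) in HT_MCS.items():
        long_gi = phy_rate_mbps(mcs, 800)
        short_gi = phy_rate_mbps(mcs, 400)
        print(f"MCS {mcs} {modulation:>6} {coding}: "
              f"{long_gi:5.1f} Mb/s (800ns GI), {short_gi:5.1f} Mb/s (400ns GI)")
```

These are signalling rates only; actual throughput is lower again once you account for protocol overhead, retransmissions and airtime sharing.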

The third factor is concurrent spatial streams. 802.11n uses OFDM, whereby the data is split across many narrow subcarriers within the channel. Using multiple radio chains (each with their own antenna), MIMO spatial multiplexing lets you transmit multiple independent “streams” at the same time within a single channel; the receiver separates them again by exploiting the different paths each one takes through the environment. There are practical limits to this, however, both in physical terms (e.g., you need separate radios, antennas and sufficient power for each stream) and electromagnetic terms (e.g., they will interfere with each other if there is not enough spacing between them, making the signal unrecoverable at the receiver). 802.11n supports a maximum of four streams. In practice, only access points support the full four streams. Most client solutions were 1x1 (single receive, single transmit – typically used for IoT devices and other low-bandwidth or cheap devices), 2x1 (2 receive, 1 transmit – typically used by cheap to mid-tier laptops where download is more important than upload), 2x2 (high-end laptops) or 3x(2|3) (extremely rare, very high-end laptops – plus some PCI Wi-Fi cards for fixed-in-place desktops).

As you can see in the image above, as reported by my Meraki (i.e., enterprise-oriented kit), the 2Go only supports a single stream. This means that in the real world it will max out at a data rate of around 20-25Mb/s, assuming “normal” interference levels and NO OTHER DEVICES on the 2.4GHz channel consuming anything more than trivial amounts of bandwidth (e.g., your kid firing up a 2.4GHz-only phone or laptop and streaming Disney+ at the same time will absolutely kill you). 192k PCM (~12.5Mb/s) could work, but is likely to have hiccups. 384k PCM (~25Mb/s) MIGHT work when the stars are aligned, but is more or less doomed. And as said before, 768k (~50Mb/s) will never work.
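To make the arithmetic behind those bitrates explicit, here is a quick sketch. It assumes stereo PCM carried in 32-bit samples with no protocol overhead, which is my assumption (it is what reproduces the ~12.5/~25/~50Mb/s figures); the function names are made up for illustration, and the 20-25Mb/s range is just the real-world estimate from above, not a measurement.

```python
def pcm_bitrate_mbps(sample_rate_hz, bits_per_sample=32, channels=2):
    """Raw PCM payload rate in Mb/s (assumes 32-bit stereo, no overhead)."""
    return sample_rate_hz * bits_per_sample * channels / 1_000_000


def link_share(required_mbps, link_mbps):
    """Fraction of the link's capacity the stream would need (1.0 = all of it)."""
    return required_mbps / link_mbps


# Rough real-world estimate for single-stream 2.4GHz, taken from the text above.
REALISTIC_LOW_MBPS = 20
REALISTIC_HIGH_MBPS = 25

if __name__ == "__main__":
    for rate_hz in (192_000, 384_000, 768_000):
        need = pcm_bitrate_mbps(rate_hz)
        worst = link_share(need, REALISTIC_LOW_MBPS)
        best = link_share(need, REALISTIC_HIGH_MBPS)
        print(f"{rate_hz // 1000}k PCM: {need:.1f} Mb/s, "
              f"needs {best:.0%} to {worst:.0%} of the link")
```

Anything needing close to (or more than) 100% of the link leaves no room for retransmissions or other devices, which is why 384k is so marginal.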

Had Chord used a dual-stream solution, they’d have bought the 2Go a bit of headroom such that 384k would probably work most of the time, and 768k could work when the stars are aligned, but would largely be unreliable. Better yet would have been a dual-band, dual-stream solution, as then you end up with four times the real-world bandwidth of the solution they implemented. Even 768k could work then, though it would probably still suffer hiccups. A big advantage of 5GHz is that it has way more channels, too, so there is much less interference from your neighbors etc., making for a far more reliable signal (i.e., higher real-world data rates on average).
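As a sanity check on the "four times" figure, here is a small sketch comparing theoretical top 802.11n rates for different stream counts and channel widths. It assumes the top encoding (64-QAM, rate 5/6, 400ns guard interval) and the standard 802.11n data subcarrier counts (52 for 20MHz, 108 for 40MHz); the `top_rate_mbps` helper is hypothetical. These are theoretical ceilings, so treat the ratio as indicative of the real-world gain rather than a prediction of it.

```python
# 802.11n data subcarriers per channel width (MHz).
DATA_SUBCARRIERS = {20: 52, 40: 108}
BITS_PER_SUBCARRIER = 6   # 64-QAM
SYMBOL_US = 3.6           # 3.2us symbol + 400ns guard interval


def top_rate_mbps(channel_mhz, streams):
    """Theoretical top rate in Mb/s at 64-QAM, coding rate 5/6."""
    data_bits = DATA_SUBCARRIERS[channel_mhz] * BITS_PER_SUBCARRIER * 5 / 6
    # bits per microsecond is the same thing as Mb/s
    return data_bits * streams / SYMBOL_US


if __name__ == "__main__":
    baseline = top_rate_mbps(20, 1)  # single stream, 20MHz (2.4GHz-style)
    for label, mhz, streams in (
        ("1 stream, 20MHz", 20, 1),
        ("2 streams, 20MHz", 20, 2),
        ("2 streams, 40MHz (5GHz)", 40, 2),
    ):
        rate = top_rate_mbps(mhz, streams)
        print(f"{label:<26} {rate:6.1f} Mb/s  ({rate / baseline:.2f}x)")
```

The 40MHz case comes out slightly better than double because a 40MHz channel carries a little more than twice the data subcarriers of a 20MHz one.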