It’s a little-known fact that Bob Stuart is really Kevin Crumb and Chrislayer is the go-to personality when MQA is attacked.

No, that was your incorrect assumption/comprehension. A previous poster replied to Chris and referenced a pro MQA cabal of many.

Regardless, I thought you said many months ago that you were leaving because you could not stomach Roon staff criticism of your posting behavior?

Yeah, you left.

I’ve said I’m going, pretty clearly, so why this comment, a repetition of something I said I felt was highly patronising? You’ve seen me off! No more options needed.

I disagree, it’s all about one topic, probably mostly one person, but certainly the anti-MQA gang. Lots of the comments are perfectly innocent, as I’ve mentioned above (repeatedly). Deleting these very innocent comments speaks volumes. That’s hearsay but unsurprising, either tittle-tattle from the anti-MQA brigade (your “community” from CA) or from another (minority interest) site that virtually banned itself out of existence. This is a really poor phrase to use, it’s really very insulting, it could …

But, apparently, you cannot stay away. Also unfortunate that you cannot stick to your word.

AJ

Kevin Crumb

Split and Glass … both great films, but then I’m quite a fan of M. Night Shyamalan’s work.

Going back on topic, and forum etiquette …

-

Please discuss the topic not the posters

-

Don’t get distracted by how a post is phrased …

We are not robots and should be able to understand and intelligently interpret posts …

Please rise above pettiness and self-moderate, then we [moderators] won’t have to.

No, that was your incorrect assumption/comprehension

I think I said from memory…

because you could not stomach Roon staff criticism of your posting behavior

I forgave them, I’m like that.

Also unfortunate that you cannot stick to your word

I left, I stuck to my word. Nice to know that you follow me personally, slightly creepy but hey!

Next…

So here’s some fun. 2L Records are kind enough to post samples comparing 16/44.1 MQA with the “standard” Redbook 16/44.1 version.

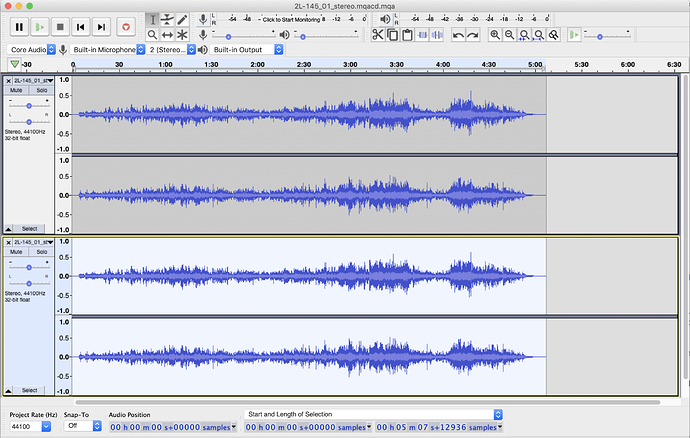

Downloading the “Hoff: Innocence” track in both formats, let’s fire up Audacity and see how they differ. First we need to time-align the two tracks (which required snipping 88221 samples from the start of the Redbook track):

Then we invert the Redbook track and choose Tracks→Mix→Mix and Render. Here’s the result:
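For anyone who’d rather script the null test than click through Audacity, here’s a minimal Python sketch. It assumes both tracks are already decoded to one channel of integer samples at the same rate; the function names are my own, and the brute-force offset search is just one simple way to find the trim (the 88221-sample figure above came from Audacity, not from this code). It only trims the `reference` track, so if the other track is the one that starts late (as with the Nielsen piece below), swap the arguments.

```python
import math


def null_test(reference, candidate, offset):
    """Drop `offset` leading samples from `reference` to time-align it, invert
    it, and mix it with `candidate`. Returns the residual sample list."""
    aligned = reference[offset:]
    n = min(len(aligned), len(candidate))
    # Inverting a track and summing is the same as subtracting it.
    return [candidate[i] - aligned[i] for i in range(n)]


def best_offset(reference, candidate, max_offset, window=4096):
    """Brute-force search for the leading-sample trim on `reference` that
    minimises residual energy over the first `window` samples of `candidate`."""
    seg = candidate[:window]
    # Only try offsets that leave at least len(seg) reference samples, so every
    # trial residual covers the same number of samples.
    last = min(max_offset, len(reference) - len(seg))
    if last < 0:
        raise ValueError("reference is too short for this window")

    def residual_energy(off):
        return sum(x * x for x in null_test(reference, seg, off))

    return min(range(last + 1), key=residual_energy)


def rms_dbfs(samples, bits=16):
    """RMS level of `samples` in dB relative to the full-scale peak value."""
    if not samples:
        raise ValueError("no samples")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0:
        return float("-inf")
    return 20 * math.log10(rms / 2 ** (bits - 1))


# Toy data: the "Redbook" track starts 3 samples later than the "MQA" one.
redbook = [0, 0, 0, 3, -1, 4, 1, -5, 9, 2, -6]
mqa = [3, -1, 4, 1, -5, 9, 2, -6]
off = best_offset(redbook, mqa, max_offset=5, window=5)
print(off)                                  # 3
print(rms_dbfs(null_test(redbook, mqa, off)))  # -inf (perfect cancellation)
```

On real tracks the residual won’t be zero, of course; its RMS level is a single-number version of “how good is the cancellation”.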

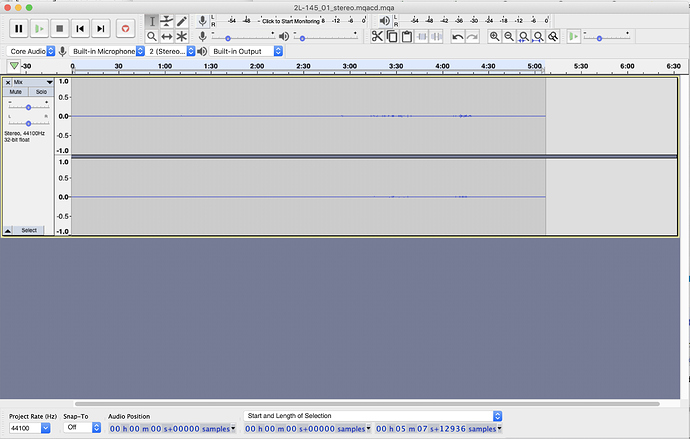

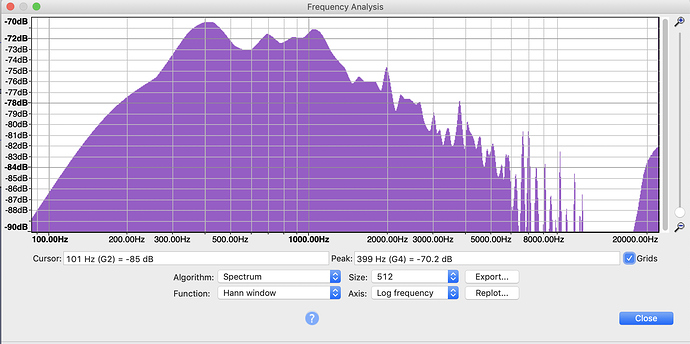

Aside from a few glitches near the end, the cancellation looks pretty good. But how good is it? Let’s pick a quiet passage and see what the Spectral Analysis uncovers:

Between 3 kHz and 16 kHz, the noise is below -96 dB. But below 1.8 kHz, the noise rises rather inexorably, to -70 dB at low frequencies. I tried several different passages, and they all look similar.
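If you want a rough, scriptable stand-in for the spectrum plot, here’s a naive DFT sketch with a Hann window. The window choice and the 0 dB reference (a sine peaking at full scale) are my assumptions; Audacity offers several windows and uses its own scaling, so absolute numbers won’t necessarily match the plots here. It’s O(n²), so feed it a short chunk (a few thousand samples) of the residual from a quiet passage.

```python
import cmath
import math


def spectrum_db(samples, rate, bits=16):
    """Naive Hann-windowed DFT. Returns (frequency_hz, level_db) pairs, where
    0 dB is a sinusoid whose peak equals full scale for `bits`-bit audio."""
    n = len(samples)
    full_scale = 2 ** (bits - 1)
    # Periodic Hann window; its coherent gain (mean value) is 0.5.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * k / n) for k in range(n)]
    window_sum = sum(window)
    levels = []
    for b in range(n // 2 + 1):
        acc = sum(
            samples[k] * window[k] * cmath.exp(-2j * math.pi * b * k / n)
            for k in range(n)
        )
        # Double non-DC, non-Nyquist bins to account for the mirrored
        # negative-frequency half of the spectrum.
        scale = 1 if b == 0 or 2 * b == n else 2
        amp = scale * abs(acc) / (window_sum * full_scale)
        db = 20 * math.log10(amp) if amp > 0 else float("-inf")
        levels.append((b * rate / n, db))
    return levels


# Sanity check: a full-scale sine landing exactly on a bin reads about 0 dB.
n = 64
tone = [32768 * math.sin(2 * math.pi * 8 * k / n) for k in range(n)]
freq, level = max(spectrum_db(tone, rate=n), key=lambda p: p[1])
print(freq, round(level, 3))
```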

It has been reported that 16/44.1 MQA has a bit-depth of only 13 bits (with the remaining 3 LSBs devoted to the MQA data). This experiment seems to bear that out.

If you took a 13-bit file and applied noise-shaping dither to it, you could push the noise down below -96 dB for part of the audio range. But you’re stuck with plainly audible noise (at roughly the expected 12 bits ≈ -72 dB level) at lower frequencies, and rising noise levels at the highest frequencies (exactly as we found).
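The “12 bits ≈ -72 dB” figure is the usual rule of thumb of about 6.02 dB per bit (one LSB step relative to full scale). A quick sketch of that arithmetic; note it’s a ballpark only, since the textbook SNR for a full-scale sine adds another 1.76 dB, and per-bin levels on a spectrum plot also depend on the FFT size.

```python
import math


def lsb_floor_db(bits):
    """Level of one LSB step relative to full scale: 20*log10(2**-bits),
    i.e. about -6.02 dB per bit. A rule of thumb, not an exact noise figure."""
    return -20 * bits * math.log10(2)


for bits in (12, 13, 16):
    print(bits, round(lsb_floor_db(bits), 1))
# 12 -72.2
# 13 -78.3
# 16 -96.3
```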

I would post the difference file here, if the forum software allowed it. But it’s trivial to recreate yourself, if anyone wants to give it a listen, or analyze it further.

N.B.: Just to be clear, the difference is equal parts “signal” (from the original Redbook file) and “noise” (from the MQA data embedded in the MQA file). What’s important here is the overall level, which is what is being plotted.

Try the seventh selection, Carl Nielsen Piano Music. It is a 16 bit 44.1 kHz DAT original recording. No ultrasonic information for MQA to fold/unfold.

And compare the three file sizes. The 80 MB MQA stereo file, which is presumably 24 bit 44.1 kHz, is highly inefficient.
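For comparing file sizes, the raw PCM arithmetic is just duration × sample rate × channels × bytes per sample. A sketch; the 5-minute duration below is purely an illustrative assumption, not the length of the Nielsen selection, and a FLAC of the same audio would normally be smaller by an amount that depends on the content.

```python
def pcm_size_mb(seconds, bits, rate=44_100, channels=2):
    """Uncompressed PCM payload size in megabytes (10**6 bytes), ignoring
    headers, tags, and any lossless compression such as FLAC."""
    bytes_per_sample = bits // 8
    return seconds * rate * channels * bytes_per_sample / 1_000_000


# A hypothetical 5-minute stereo track at 44.1 kHz.
for bits in (16, 24):
    print(f"{bits}/44.1: {pcm_size_mb(300, bits):.1f} MB")
# 16/44.1: 52.9 MB
# 24/44.1: 79.4 MB
```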

AJ


Not much better.

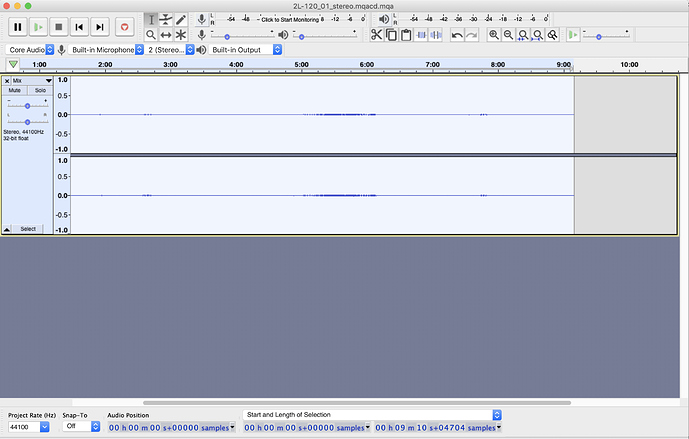

After time-aligning the tracks (which required snipping 3 samples from the beginning of the MQA version), the difference looks like:

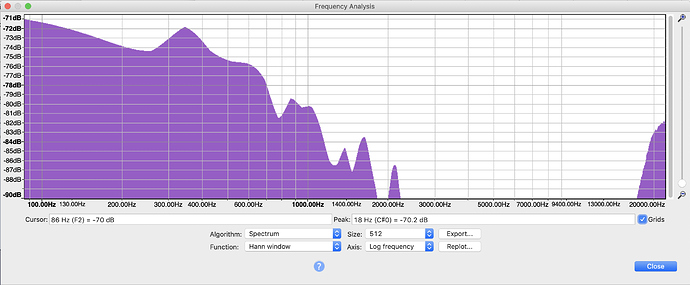

This looks worse than the Hoff track. Again picking as quiet a passage as possible, here’s what the Spectral Analysis shows:

Looks like much less aggressive noise-shaping, but again, the overall noise level is consistent with the MQA file having a bit-depth of 13 bits.

Update: Actually, the situation is weirder than that. With the Carl Nielsen piece, the original source was 16/44.1. So you’d expect that “Master Quality”, if it meant anything, would mean that the n most significant bits of every sample would be the same as in the original “CD” version. But they’re not!

The MQA version seems to have its dynamic range slightly compressed, and the volume boosted (so that the peak levels almost match). You can check this out for yourself using the Sample Data Export tool in Audacity.

That’s a pretty sneaky trick from the Loudness Wars…

So here’s the thing… if MQA fixed the bit-perfect thing… and didn’t throw away any data… and there were hence no noise issues… would folks here still hate it? Or rather, of those who hate it, would any hate it less?

Bandwidth & storage are both cheap. I feel like the “we are sending fewer bits and requiring you to store fewer bits” value proposition is a little ‘last decade’; why doesn’t MQA just say “we are embedding the potential for DRM in return for managing the canonical / authoritative metadata and version control around each master”? That feels like it would work.

The reality is that the publishers own the masters, and their economics are upside down from where they were 30 years ago. I’m not an apologist for the publishers; I cheered when streaming knocked them on their collective ass. But… they own the IP, and they are going to find a way to get paid. This way doesn’t bother me nearly as much as it bothers some, so long as they actually get out of the way of bit-perfect playback. Why don’t they just make the unfolding happen with additional bits instead of hijacking potentially (notice my diplomatic use of ‘potentially’!) useful data?

The reality is that the publishers own the masters, and their economics are upside down from where they were 30 years ago.

That’s not exactly true. Because of streaming, the value of publishing catalogues is significantly higher than it ever was. Just look at how much Tencent recently paid for a 10% interest in the Universal Music catalogue ($3bn).

Streaming has actually saved the music studios from the near-bankruptcy (remember EMI?) they faced as a result of unauthorized copying.

So here’s the thing… if MQA fixed the bit-perfect thing… and didn’t throw away any data… and there were hence no noise issues… would folks here still hate it?

If the format were open and available, then no. As long as I can’t open it up properly due to obfuscation, then yes, I will hate it with the intensity of a thousand suns.

I really don’t care how it sounds.

Sheldon

why doesn’t MQA just say “we are embedding the potential for DRM in return for managing the canonical / authoritative metadata and version control around each master”? That feels like it would work

Never understood why MQA is done the way it is.

I would have thought the best approach would be to MD5 the audio file and store the hash in a database that people (e.g. Roon, Tidal, etc.) could then look up to validate its authenticity.

Given the flexibility of blockchain (and it’s pretty much open source) this might be another option.
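A minimal sketch of the hash-and-lookup idea, assuming a plain in-memory dict as a hypothetical stand-in for the shared database (no such service exists here, and the function names are illustrative). Two caveats: MD5 is fine for catching accidental changes but isn’t collision-resistant, so `hashlib.sha256` would be the safer drop-in; and any change to the samples, deliberate DSP included, produces a different digest. For what it’s worth, FLAC already stores an MD5 of the unencoded audio in its STREAMINFO block.

```python
import hashlib

# Hypothetical registry standing in for the shared lookup database
# (queried by players such as Roon or Tidal). Not a real service.
AUTHENTIC_MASTERS = {}


def audio_digest(pcm_bytes):
    """MD5 hex digest of the raw audio payload. Hashing the decoded audio
    rather than the whole file means editing tags doesn't change it."""
    return hashlib.md5(pcm_bytes).hexdigest()


def register_master(pcm_bytes, description):
    """Label/studio side: publish the digest of an approved master."""
    digest = audio_digest(pcm_bytes)
    AUTHENTIC_MASTERS[digest] = description
    return digest


def verify(pcm_bytes):
    """Player side: return the registered description, or None if unknown."""
    return AUTHENTIC_MASTERS.get(audio_digest(pcm_bytes))


master = bytes([0, 1, 2, 3])
register_master(master, "Approved master (example)")
print(verify(master))               # Approved master (example)
print(verify(bytes([0, 1, 2, 4])))  # None
```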


What is the threat model this “authentication” is supposed to protect against? That some intermediary modifies the file in transit? Really?

The only thing I see it preventing is the end-user applying DSP — which I would have thought was a desirable capability.

I thought the idea of MQA was to prove that the audio file has come from a legitimate source, i.e. a rip from the master validated by the studio or artist.

As opposed to … ?

Being some crappy upsampled 16/44 file sold as hi-res, I suppose.

Personally, I see no use for MQA, but I’m just trying to understand why MQA seems to be a stealth DRM that forces manufacturers to pay MQA (Meridian) licensing fees to use it in DACs or playback devices, when its stated purpose is to validate the authenticity chain of the recording.

I’m trying to think if there’s anything on this Earth that I hate with such intensity

Marmite…

You’ve come closer than I thought possible (with apologies to our Antipodean friends), but not even Marmite.

I just feel sorry for the people that are disappointed by MQA.

Bob Stuart is just laughing at you guys.

MQA or not, you will never have the crown jewels.

You’d better listen to George Harrison’s song “All Things Must Pass” and sing “All things must pass awaaay”.

Whatever MQA’s other intentions are, their stated intentions are to provide so-called Master Authentication, or provenance, for each MQA file, and to provide a better-sounding file in a smaller file size. Have they accomplished their stated goals? Many people think so; many people don’t. Are these reasonable goals? Same as above. Ad infinitum.

I just think that the “smaller file size” attribute is less useful / appealing to consumers than it once was (bandwidth and storage are both cheap, reliable, and rapidly becoming cheaper and more reliable in most places, with due apologies to friends in places where prices aren’t dropping as fast as in the US). To keep saying “we’re doing this amazing thing by reducing streaming bandwidth” is no longer selling a valuable feature. They should give that up. If, in addition, they can find information that isn’t available in the HiRes file (somehow) and carry it alongside, that would be awesome. But I don’t know what that information is.

I think the USP got set a while back, and now we’re in a place where the primary marketing claim (“Look at the magic we can do in a smaller file size / stream”) has a major component that is kind of unimportant, because “smaller file size / stream” is basically irrelevant. If they can create provenance-authenticated content, fabulous. If they can create better-sounding streams / files, amazing. But to claim that they are somehow constrained and need to pass all this information through a narrower bitstream is, on the face of it, absurd. If they let go of that, the business model would be much cleaner.