xxx

March 16, 2018, 11:34pm

22

You can SSH from the Terminal app on your Mac. The form is “ssh userid@IP-address”, e.g. ssh MYID@192.168.0.13 . Once you’re in, it’s like being on the machine. Super easy.

BTW - SSH was originally a Unix command, so it is built into Linux and macOS. To use it on Windows one needs an extra piece of software.

Thanks for that Slim!

After running the utility QNAP links to above, I discovered that it takes about 3 minutes for my NAS to settle out after quitting Roon. The utility no longer reports anything to the Terminal screen or the Log it generates.

Do I post the log here, send it to Roon support or send it to QNAP support?

xxx

March 17, 2018, 2:20pm

24

Since you’re here, might as well check with @support .

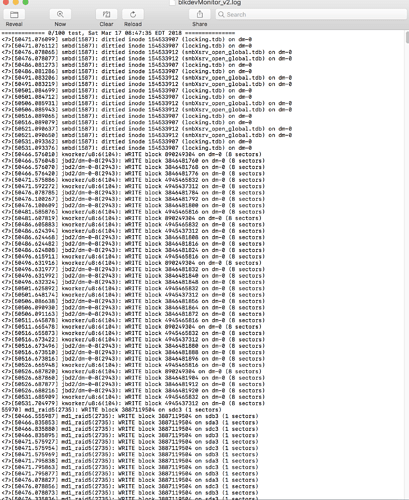

So I quit Roon, waited 5 minutes and started the utility blkdevMonitor_20151225.sh. I let it run for 5 minutes but it didn’t generate any data. I stopped the utility and started Roon with Storage disabled and waited 15 minutes for things to settle. Then I started the utility again and let it run for just 3 minutes. This is just the 1st page of over 25 pages of the resulting log file for @support to review:

Hi John,

https://kb.roonlabs.com/Roon_Server_on_NAS

Have you considered applying this configuration (i.e. place your Roon core on the NAS - it works quite well for me)? The problem you describe sounds like either a grounding issue or RF noise from your NAS and/or Mac. For starters, you might consider keeping your NAS on a separate electrical circuit from your computer or playback system.

Just some food for thought (perhaps you have already thought of these ideas).

Good luck!

Gorm

Hi Gorm,

Thanks for the suggestions! My TS-431P doesn’t meet the recommended requirements. The “noise” is coming from the NAS itself as the disks are being accessed constantly while Roon is running.

@support I just sent the blkdevMonitor_v2.log file to QNAP via a support ticket. If you want it also let me know the email to send it to.

xxx

March 20, 2018, 7:41pm

29

How old are your drives? Could a drive be failing?

John_Conte

March 21, 2018, 12:40am

31

Only when Roon is running? I quit Roon and the disk access stops. The drives are probably 2-3 years old.

John_Conte

March 22, 2018, 11:29am

32

QNAP got back to me just saying to not run Roon. They did not specify what was causing all the disk access. I don’t think they want to spend resources on it.

For now, I don’t run Roon unless I want to use it.

I have exactly the same issue. Roon core on Linux Mint 18.3, watched folders for storage on a QNAP TS-453A.

The NAS used to sleep when not actively in use but over the past few months I can hear the disks being accessed every 10 seconds or so, just like the OP states.

I’m on my second year of Roon subscription. This issue was definitely not present until a few months ago.

The behavior is constant. Roon doesn’t need to be in active use (i.e. playing music or scanning for new material); it keeps going as long as Roon Server is running. I can submit logs to @support if required.
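For anyone who wants to confirm the idle disk activity independently of the QNAP utility, here is a rough sketch that compares two snapshots of `/proc/diskstats`, the Linux kernel's per-device I/O counters. It assumes you can SSH into the NAS and that a Python 3 interpreter is available there (it may not be installed by default). The function names `parse_diskstats`, `diff_diskstats` and `watch` are made up for this example. Unlike blkdevMonitor, it only shows that reads and writes are happening, not which process is causing them.

```python
import time


def parse_diskstats(text):
    """Return {device: (reads_completed, writes_completed)} from /proc/diskstats text."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 11:
            continue  # skip blank or malformed lines
        # fields: major, minor, name, reads completed, reads merged, sectors read,
        # ms reading, writes completed, ...
        stats[fields[2]] = (int(fields[3]), int(fields[7]))
    return stats


def diff_diskstats(before, after):
    """Return {device: (new_reads, new_writes)} for devices whose counters changed."""
    changes = {}
    for name, (reads, writes) in after.items():
        old_reads, old_writes = before.get(name, (reads, writes))
        if reads != old_reads or writes != old_writes:
            changes[name] = (reads - old_reads, writes - old_writes)
    return changes


def watch(interval=10, path="/proc/diskstats"):
    """Print devices that saw I/O during each interval; stop with Ctrl-C."""
    with open(path) as f:
        previous = parse_diskstats(f.read())
    while True:
        time.sleep(interval)
        with open(path) as f:
            current = parse_diskstats(f.read())
        for name, (reads, writes) in sorted(diff_diskstats(previous, current).items()):
            print(f"{time.strftime('%H:%M:%S')} {name}: +{reads} reads, +{writes} writes")
        previous = current
```

Calling `watch()` once with Roon Server running and once after quitting it should show whether the accesses every 10 seconds or so really stop. The 10-second default interval is just a guess based on what I hear from the disks.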

Glad to know I’m not crazy or that I have some unusual set of circumstances creating this behavior from Roon.

I just restarted my NAS and noticed this pop-up on my screen:

Roon was not running at the time and had been shut down for about 12 hours.

john

March 23, 2018, 1:52am

35

Hello @John_Conte ,

The tech team is looking over the logs generated by the QNAP, I will be sure to update this thread if we see anything that could be causing this issue to occur for other users.

-John

@John_Conte and @support I ran the QNAP utility. Here is my output, very similar to John’s above. RoonServer was running but idle.

===== Welcome to use blkdevMonitor_v2 on Fri Mar 23 06:16:55 GMT 2018 =====

============= 1/100 test, Fri Mar 23 06:17:43 GMT 2018 ===============

@support Is there any progress with this issue?

The constant writes to the NAS by Roon must have an adverse effect on the longevity of the drives.

john

April 5, 2018, 5:06pm

38

Hello @eclectic ,

Can you take a screenshot of your “Storage” tab in Roon settings? We have some NAS related fixes lined up for the next release of Roon which may help things, however I’d still like to be able to pass on as much data to the team as possible to make sure we can nail down what is going on here.

-John

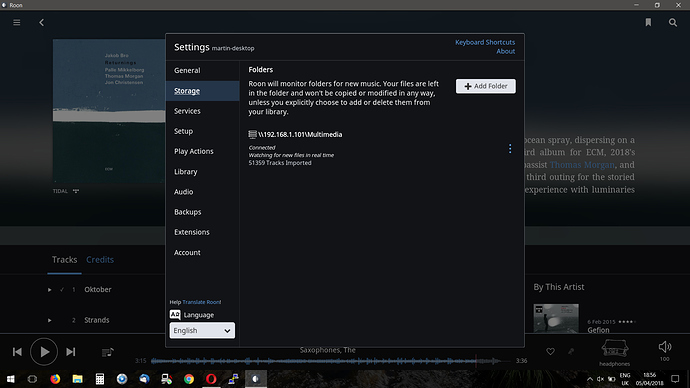

@john Thanks for the reply.

Is this what you want?

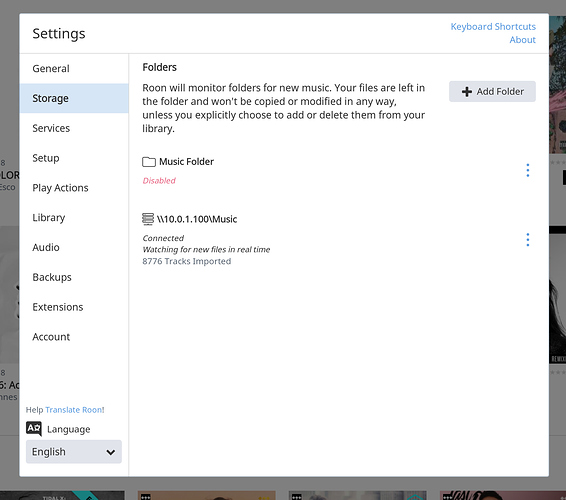

@John , here is my screenshot too. I figure more info is better.

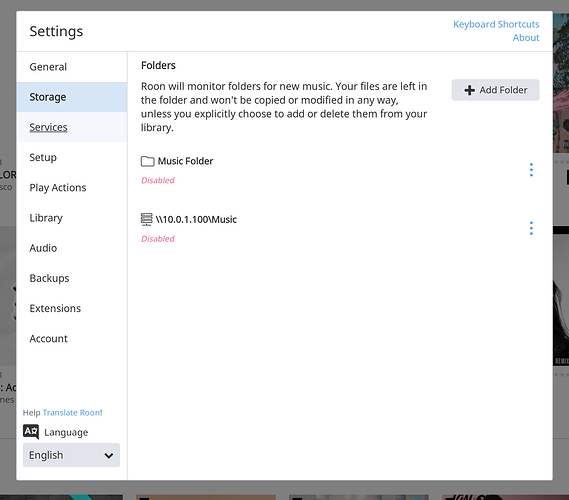

@john , as I stated earlier in this thread, if I Disable my Storage completely, the NAS access continues even if I restart Roon. Here is that screenshot:

But if I then restart my iMac and then start Roon with Storage Disabled, there is no NAS access! Perhaps this is a clue to what may be happening?