Is it pretty common for hard disk errors to cause memory leaks? If that were the case, wouldn’t they be affecting other things on my server too?

Also, sda1 is the partition on my storage SSD that holds my local music files. It’s not the OS disk (the NVMe drive), so I don’t think reinstalling the OS would do anything about those errors.

root@nuc:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 16G 0 16G 0% /dev

tmpfs 3.2G 4.4M 3.2G 1% /run

/dev/nvme0n1p2 23G 5.3G 17G 25% /

tmpfs 16G 4.0K 16G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda1 1.8T 999G 742G 58% /mnt/storage

/dev/nvme0n1p5 1.9G 6.4M 1.7G 1% /tmp

/dev/nvme0n1p3 9.2G 3.3G 5.4G 38% /var

/dev/nvme0n1p6 193G 26G 158G 14% /home

/dev/nvme0n1p1 511M 18M 494M 4% /boot/efi

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/7e4b7bc557d6dbebe65848a8e647c81480fa8607e8f81e7e127051b37004096a/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/b99eec5fc2214896f6f23a788607149f579d217c15c6c11e996677c031313c2f/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/505205bf611016752431128729b71ffbc2f13139f53f7ddcb7ed6b77befd7f3a/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/25f374918223be019b25f35f21a053d8b4b455ddaada0259437c215bd85c78cc/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/6b47ff466d400750ad8cd9dffb86e415426a3f6b2cfc81e43befdf4fbb744304/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/dfd78587070db6673a0b6c32e6cb38cc2b3a00ffd8cb272252f473a7cb96730e/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/bc93535b62129f20b981d88af9990350a47ba40e9fc6a6f5702f4e209f1b2871/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/8c75355d40afdac24635870bc92fae92927b02e020718c1ec63887ea85fba062/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/71c1393dd3d2b40e72bc5c6081d1123c9635d2fcd012d58b39e7727f8f38d80b/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/8eb664d4fcce3c4afe32e302a13763884dbc170369c3bb8d14b0a21dfe26c55c/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/c9ce4eb7c674ebc8fcfae7f629c8c8097d3600603668d7655d0b2e6834343cbd/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/ca999edab1cd3beb0bbd53795f02e6c768ee17108f5831ce79c978f20df0f178/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/73034525e50a1794c6ea849b0a1e595cca9ceaf592243c2f969025eb39a0653a/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/97a5e015299c33a497f3d4649333ea0981abb49f7f60b2646b879c894f15273b/merged

overlay 193G 26G 158G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/a0b81d42d80ede5627b0f29f9352c9664ae8e3a33791269903188060a9ff2a85/merged

tmpfs 3.2G 0 3.2G 0% /run/user/1000

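To confirm which mounts actually live on the storage SSD, the `df -h` output above can be grouped by physical disk. A minimal Python sketch, assuming the output is pasted in as text with one filesystem per line; `mounts_by_disk` is a hypothetical helper, and the partition-to-disk naming rule (`nvme0n1p2` → `nvme0n1`, `sda1` → `sda`) is a simplification:

```python
import re
from collections import defaultdict


def mounts_by_disk(df_output):
    """Group block-device mounts from `df -h` output by physical disk.

    Virtual filesystems (udev, tmpfs, overlay) and network mounts are skipped
    because their first column does not start with /dev/.
    """
    disks = defaultdict(list)
    for line in df_output.splitlines():
        fields = line.split()
        # Data rows have 6 columns: Filesystem Size Used Avail Use% Mounted-on
        # (the header splits into 7, blank lines into 0, so both are skipped)
        if len(fields) != 6 or not fields[0].startswith("/dev/"):
            continue
        part = fields[0][len("/dev/"):]
        # Simplified naming rules: nvme0n1p2 -> nvme0n1, sda1 -> sda
        if part.startswith("nvme"):
            disk = re.sub(r"p\d+$", "", part)
        else:
            disk = re.sub(r"\d+$", "", part)
        disks[disk].append(fields[5])
    return dict(disks)
```

On the output above, `sda` would map only to `/mnt/storage`, and every other `/dev/` mount maps to `nvme0n1`. The overlay entries are Docker layers stored on the `/home` filesystem, which is why they repeat its size figures. Mount points containing spaces would break the simple whitespace split.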
synology.home.arpa:/volume1/data 104T 65T 40T 63% /mnt/data

This is only my experience:

Once you start seeing errors somewhere, they snowball.

If the base OS isn’t stable, nothing that depends on it can be predictable.

I understand that. But we’re not seeing OS errors here, are we?

I’ll go back to reading along.

If the sda drive only holds music files, I’d simply disconnect it and see whether Roon starts up and runs without memory problems. It seems clear that your Linux system is reporting problems with sda; what effect that has on Roon remains to be tested. In any case, sda should be checked and probably replaced.

I wasn’t trying to be offensive. I just want to base my next course of action on facts. If any of the logs point to the OS being corrupted or anything like that, I’m all for fixing it. But if the logs point to errors on the storage disk (which only holds my local music files), then reinstalling the whole OS won’t do anything to solve those errors.

Yes, that makes sense and this will be my next course of action.

EDIT: I already ran fsck against /dev/sda1 and fixed a couple of corrupted directories. I’m guessing this is because lots of the albums in there have Chinese names. Anyway, it’s clean now:

root@nuc:~# fsck -y /dev/sda1

fsck from util-linux 2.36.1

e2fsck 1.46.2 (28-Feb-2021)

/dev/sda1: clean, 1113099/122101760 files, 270035392/488378385 blocks

For now I’ve left it unmounted to see how the Roon Core behaves without the drive.

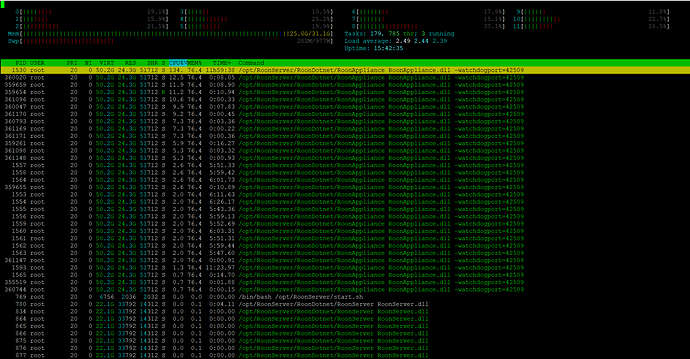

I’m not seeing any changes to the RAM usage after I unmounted the /dev/sda1 partition from the system. I’ll post another set of logs when the OOM killer kicks in to kill the roonserver service.
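Since the question is whether RAM usage changes with the drive unmounted, it helps to log usage at fixed intervals until the next OOM kill rather than eyeballing a graph. A minimal Python sketch, assuming a Linux host with `/proc/meminfo`; "used" is taken here as `MemTotal - MemAvailable`, which is one common definition and will not exactly match every monitoring tool. Both helper names are hypothetical:

```python
import time


def used_mib(meminfo_text):
    """Return MemTotal - MemAvailable in MiB from /proc/meminfo-style text."""
    values = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            # /proc/meminfo reports sizes in kB (actually KiB)
            values[key] = int(parts[0])
    return (values["MemTotal"] - values["MemAvailable"]) // 1024


def log_usage(interval_s=60, samples=3, path="/proc/meminfo"):
    """Print one timestamped used-memory sample every interval_s seconds."""
    for i in range(samples):
        with open(path) as f:
            used = used_mib(f.read())
        print(time.strftime("%Y-%m-%d %H:%M:%S"), f"{used} MiB used", flush=True)
        if i < samples - 1:
            time.sleep(interval_s)
```

For example, `log_usage(interval_s=300, samples=288)` would record roughly one day at five-minute intervals; redirecting the output to a file keeps a record to post alongside the OOM logs.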

root@nuc:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 16G 0 16G 0% /dev

tmpfs 3.2G 4.1M 3.2G 1% /run

/dev/nvme0n1p2 23G 7.0G 15G 33% /

tmpfs 16G 4.0K 16G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/nvme0n1p3 9.2G 4.1G 4.6G 48% /var

/dev/nvme0n1p5 1.9G 6.3M 1.7G 1% /tmp

/dev/nvme0n1p6 193G 25G 159G 14% /home

/dev/nvme0n1p1 511M 18M 494M 4% /boot/efi

synology.home.arpa:/volume1/data 104T 65T 40T 63% /mnt/data

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/25f374918223be019b25f35f21a053d8b4b455ddaada0259437c215bd85c78cc/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/c9ce4eb7c674ebc8fcfae7f629c8c8097d3600603668d7655d0b2e6834343cbd/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/8eb664d4fcce3c4afe32e302a13763884dbc170369c3bb8d14b0a21dfe26c55c/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/6b47ff466d400750ad8cd9dffb86e415426a3f6b2cfc81e43befdf4fbb744304/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/505205bf611016752431128729b71ffbc2f13139f53f7ddcb7ed6b77befd7f3a/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/b99eec5fc2214896f6f23a788607149f579d217c15c6c11e996677c031313c2f/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/dfd78587070db6673a0b6c32e6cb38cc2b3a00ffd8cb272252f473a7cb96730e/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/ca999edab1cd3beb0bbd53795f02e6c768ee17108f5831ce79c978f20df0f178/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/73034525e50a1794c6ea849b0a1e595cca9ceaf592243c2f969025eb39a0653a/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/a0b81d42d80ede5627b0f29f9352c9664ae8e3a33791269903188060a9ff2a85/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/35b60600b6e354127b36965bb2930545fc4356cd7d9e464713fd7122b9a9250e/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/71c1393dd3d2b40e72bc5c6081d1123c9635d2fcd012d58b39e7727f8f38d80b/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/97a5e015299c33a497f3d4649333ea0981abb49f7f60b2646b879c894f15273b/merged

overlay 193G 25G 159G 14% /home/kevindd992002/docker/var-lib-docker/overlay2/8c75355d40afdac24635870bc92fae92927b02e020718c1ec63887ea85fba062/merged

tmpfs 3.2G 0 3.2G 0% /run/user/1000

@danny @noris The OOM killer just killed the process a few hours ago and here’s a new set of logs:

Again, sda1 is unmounted here, so you shouldn’t see any errors related to it. The last error I saw in /var/log/messages for /dev/sda1 was yesterday, before I unmounted it and ran fsck against it:

Apr 14 21:20:18 nuc kernel: [184845.834857] EXT4-fs (sda1): last error at time 1647358208: ext4_empty_dir:3005: inode 95815045
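The number after "last error at time" is a Unix timestamp recorded in the ext4 superblock, and the kernel periodically repeats that stored error summary for filesystems that have errors recorded. Converting it shows when the error actually happened. A small Python sketch (the helper name is hypothetical):

```python
from datetime import datetime, timezone


def ext4_error_time(message):
    """Return the 'last error at time N' epoch from an EXT4-fs kernel message
    as a UTC datetime, or None if the message has no such field."""
    marker = "last error at time "
    idx = message.find(marker)
    if idx == -1:
        return None
    # The epoch is followed by ':' and the function name, e.g. "1647358208: ext4_..."
    digits = message[idx + len(marker):].split(":")[0]
    return datetime.fromtimestamp(int(digits), tz=timezone.utc)
```

Applied to the line above, it returns 2022-03-15 15:30:08 UTC, about a month before the Apr 14 log entry, which suggests that message repeats an old recorded error rather than reporting a new one. The result is in UTC; the server’s local time zone may shift the wall-clock time.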

If you grep for “nvme” (my OS disk), you won’t see any errors either:

root@nuc:~# cat /var/log/messages | grep nvme

Apr 11 04:57:11 nuc kernel: [ 2.021043] nvme nvme0: pci function 0000:3a:00.0

Apr 11 04:57:11 nuc kernel: [ 2.030490] nvme nvme0: 12/0/0 default/read/poll queues

Apr 11 04:57:11 nuc kernel: [ 2.033344] nvme0n1: p1 p2 p3 p4 p5 p6

Apr 11 04:57:11 nuc kernel: [ 3.604254] EXT4-fs (nvme0n1p2): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 11 04:57:11 nuc kernel: [ 3.905338] EXT4-fs (nvme0n1p2): re-mounted. Opts: errors=remount-ro. Quota mode: none.

Apr 11 04:57:11 nuc kernel: [ 4.065875] Adding 1000444k swap on /dev/nvme0n1p4. Priority:-2 extents:1 across:1000444k SSFS

Apr 11 04:57:11 nuc kernel: [ 4.107595] EXT4-fs (nvme0n1p5): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 11 04:57:11 nuc kernel: [ 4.123826] EXT4-fs (nvme0n1p3): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 11 04:57:11 nuc kernel: [ 4.216924] EXT4-fs (nvme0n1p6): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 11 20:02:49 nuc kernel: [ 1.397951] nvme nvme0: pci function 0000:3a:00.0

Apr 11 20:02:49 nuc kernel: [ 1.412712] nvme nvme0: 12/0/0 default/read/poll queues

Apr 11 20:02:49 nuc kernel: [ 1.415762] nvme0n1: p1 p2 p3 p4 p5 p6

Apr 11 20:02:49 nuc kernel: [ 2.971730] EXT4-fs (nvme0n1p2): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 11 20:02:49 nuc kernel: [ 3.250802] EXT4-fs (nvme0n1p2): re-mounted. Opts: errors=remount-ro. Quota mode: none.

Apr 11 20:02:49 nuc kernel: [ 3.406679] Adding 1000444k swap on /dev/nvme0n1p4. Priority:-2 extents:1 across:1000444k SSFS

Apr 11 20:02:49 nuc kernel: [ 3.439309] EXT4-fs (nvme0n1p5): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 11 20:02:49 nuc kernel: [ 3.440938] EXT4-fs (nvme0n1p3): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 11 20:02:49 nuc kernel: [ 3.441442] EXT4-fs (nvme0n1p6): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 12 17:59:32 nuc kernel: [ 1.396130] nvme nvme0: pci function 0000:3a:00.0

Apr 12 17:59:32 nuc kernel: [ 1.412406] nvme nvme0: 12/0/0 default/read/poll queues

Apr 12 17:59:32 nuc kernel: [ 1.415251] nvme0n1: p1 p2 p3 p4 p5 p6

Apr 12 17:59:32 nuc kernel: [ 2.955983] EXT4-fs (nvme0n1p2): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 12 17:59:32 nuc kernel: [ 3.304213] EXT4-fs (nvme0n1p2): re-mounted. Opts: errors=remount-ro. Quota mode: none.

Apr 12 17:59:32 nuc kernel: [ 3.471838] Adding 1000444k swap on /dev/nvme0n1p4. Priority:-2 extents:1 across:1000444k SSFS

Apr 12 17:59:32 nuc kernel: [ 3.496214] EXT4-fs (nvme0n1p5): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 12 17:59:32 nuc kernel: [ 3.496695] EXT4-fs (nvme0n1p3): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 12 17:59:32 nuc kernel: [ 3.499688] EXT4-fs (nvme0n1p6): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 01:52:55 nuc kernel: [ 1.437639] nvme nvme0: pci function 0000:3a:00.0

Apr 15 01:52:55 nuc kernel: [ 1.453691] nvme nvme0: 12/0/0 default/read/poll queues

Apr 15 01:52:55 nuc kernel: [ 1.456548] nvme0n1: p1 p2 p3 p4 p5 p6

Apr 15 01:52:55 nuc kernel: [ 3.012376] EXT4-fs (nvme0n1p2): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 01:52:55 nuc kernel: [ 3.286245] EXT4-fs (nvme0n1p2): re-mounted. Opts: errors=remount-ro. Quota mode: none.

Apr 15 01:52:55 nuc kernel: [ 3.427827] Adding 1000444k swap on /dev/nvme0n1p4. Priority:-2 extents:1 across:1000444k SSFS

Apr 15 01:52:55 nuc kernel: [ 3.466271] EXT4-fs (nvme0n1p5): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 01:52:55 nuc kernel: [ 3.468530] EXT4-fs (nvme0n1p3): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 01:52:55 nuc kernel: [ 3.470367] EXT4-fs (nvme0n1p6): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 02:14:21 nuc kernel: [ 1.382223] nvme nvme0: pci function 0000:3a:00.0

Apr 15 02:14:21 nuc kernel: [ 1.397743] nvme nvme0: 12/0/0 default/read/poll queues

Apr 15 02:14:21 nuc kernel: [ 1.400574] nvme0n1: p1 p2 p3 p4 p5 p6

Apr 15 02:14:21 nuc kernel: [ 2.967067] EXT4-fs (nvme0n1p2): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 02:14:21 nuc kernel: [ 3.296254] EXT4-fs (nvme0n1p2): re-mounted. Opts: errors=remount-ro. Quota mode: none.

Apr 15 02:14:21 nuc kernel: [ 3.452466] Adding 1000444k swap on /dev/nvme0n1p4. Priority:-2 extents:1 across:1000444k SSFS

Apr 15 02:14:21 nuc kernel: [ 3.478669] EXT4-fs (nvme0n1p3): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 02:14:21 nuc kernel: [ 3.480230] EXT4-fs (nvme0n1p5): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

Apr 15 02:14:21 nuc kernel: [ 3.480586] EXT4-fs (nvme0n1p6): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.
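Grepping for "nvme" alone lists every boot message, so "no errors" has to be confirmed by reading each line. Filtering for lines that mention the device and also look like errors makes the check explicit. A Python sketch; the keyword list is an assumption, not an exhaustive set of storage error messages, and `suspicious_lines` is a hypothetical helper. A plain match on "error" would flag the harmless `errors=remount-ro` mount option, hence the word boundaries:

```python
import re

# Keywords that often accompany real storage problems in kernel logs.
# This list is an assumption, not exhaustive. \berror\b does not match
# "errors=remount-ro", so the normal remount line is not flagged.
ERROR_PATTERN = re.compile(
    r"\berror\b|\bfail|\bI/O\b|\btimeout|\breset|\bcorrupt", re.IGNORECASE
)


def suspicious_lines(log_text, device):
    """Return log lines that mention `device` and look like errors."""
    return [
        line
        for line in log_text.splitlines()
        if device in line and ERROR_PATTERN.search(line)
    ]
```

Against the nvme lines above this returns an empty list, while the earlier `EXT4-fs (sda1): last error at time ...` line would match for `sda1`.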

Is this information enough for us to say that this isn’t an OS issue?

Hi @Kevin_Mychal_Ong ,

Thanks for the further checks here.

It is possible that something about that drive affected the Roon database. Can you please confirm: if you set up a fresh database and hold off on importing content from the sda1 drive, does Roon still have the OOM issue?

Please test with a small library of content that isn’t on that drive. Once that is confirmed, try importing content from the sda1 drive and see whether the system stays stable or the OOMs start then.

@noris I just tried your suggestion and it didn’t make any difference. The OOM killer still eventually killed the service and broke it. Latest logs:
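The attached logs aren’t reproduced here, but when reading them the most informative line is usually the OOM killer’s own summary, which records the killed process and its size at the moment it was killed. A Python sketch, assuming the `Killed process ... total-vm:...kB, anon-rss:...kB` wording used by 5.x kernels; other kernel versions may phrase it differently, and `oom_kills` is a hypothetical helper:

```python
import re

# Matches the OOM killer summary, assumed to look like:
# "Out of memory: Killed process <pid> (<name>) total-vm:<n>kB, anon-rss:<n>kB, ..."
OOM_RE = re.compile(
    r"Killed process (?P<pid>\d+) \((?P<name>[^)]+)\) "
    r"total-vm:(?P<total_vm>\d+)kB, anon-rss:(?P<anon_rss>\d+)kB"
)


def oom_kills(log_text):
    """Yield (pid, name, total-vm GiB, anon-rss GiB) for each OOM kill found."""
    for m in OOM_RE.finditer(log_text):
        yield (
            int(m["pid"]),
            m["name"],
            round(int(m["total_vm"]) / 1024**2, 1),  # kB -> GiB
            round(int(m["anon_rss"]) / 1024**2, 1),  # kB -> GiB
        )
```

`anon-rss` is anonymous memory that was resident in RAM when the process was killed, so a large value there, not just a large `total-vm`, indicates the process really was holding that much RAM.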

@noris do we have any updates on this?

I tried reinstalling again, this time without logging in to Tidal and adding only my local music files. The issue still happened. At this point, I’ve already ruled out both Tidal and my local music files as the cause of the issue.

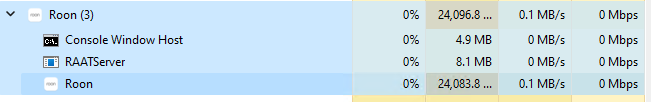

Also, I’m not sure whether this matters, but I also see around 17 GB to 25 GB of RAM usage by the Roon app on my Windows 11 machine. That is another insane amount of RAM, and I’m not sure why it’s happening on a Windows machine too, especially since that app isn’t running as a Core, just as an endpoint.

Yeah, I’m seeing 5 GB in use (out of 8 GB installed) on my DS 918+ since the reboot 7 hours ago, and 3.5 hours of CPU time with nothing playing on any of the endpoints. It sure seems to be an issue with the latest build.

@noris Could you give me an update here? I don’t want to come off as unreasonable, but I’ve been at this for 3 weeks now and have isolated almost everything that could contribute to the problem.

Hi Kevin, is the usage on the Windows machine really resident RAM, or is it rather virtual memory?

For sure, what’s happening on your NUC running Debian is wrong. You have shown up to 25 GB of resident RAM usage, which is completely out of the expected range. I am running Ubuntu 20.04 Server on a custom machine with 16 GB of RAM, and resident RAM usage is stable, fluctuating between 3 GB and 5.5 GB, with approx. 220,000 tracks.
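On the Linux Core, the resident-versus-virtual distinction can be checked directly from `/proc/<pid>/status`. A Python sketch (Linux only, so it does not answer the question for the Windows machine); the helper name is hypothetical, and the PID of the Roon process would come from something like `top`:

```python
def rss_vs_virtual(pid="self"):
    """Return (VmRSS, VmSize) in MiB for a process, from /proc/<pid>/status.

    VmRSS is memory actually resident in RAM; VmSize is the whole virtual
    address space, which can be much larger without using that much RAM.
    The default "self" inspects the calling process.
    """
    fields = {}
    with open(f"/proc/{pid}/status") as f:
        for line in f:
            key, _, rest = line.partition(":")
            if key in ("VmRSS", "VmSize"):
                fields[key] = int(rest.split()[0]) // 1024  # kB -> MiB
    return fields["VmRSS"], fields["VmSize"]
```

A VmSize far above VmRSS is normal for runtimes that reserve address space up front; it is VmRSS climbing toward the machine’s RAM (plus swap) that typically ends in OOM kills.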

You had an apparently failing drive holding your music files, but with it disconnected, RAM usage stayed as high as before. The same happened with a fresh Roon database.

So I wonder whether you would really be that reluctant to simply reinstall the OS on your NUC. That’s what I would do at this point, or probably would have done already. Ubuntu 22.04 LTS will be released today or tomorrow; you could set it up on your hardware, install Roon Server from scratch, and see how it behaves. That takes about half an hour, and as unsatisfying as it is not to know what is really going on, it may just resolve your problem.

I suggested this 16 days ago. Minimal Debian install, nothing but the dependencies for the Core, then run and monitor.

Hey Andreas. I’d have to check the Windows machine again, because I’ve since uninstalled the Roon app there, thinking it “could” be causing the problem with the Core on the NUC, but it wasn’t.

The only reason I’m reluctant to reinstall is that there would be downtime for Plex and my other Docker containers on the NUC. I understand where you guys are coming from, and I know it’s very easy to reinstall a Linux OS, but once you add the time to reconfigure the other services, it gets a bit more complicated. I’m also very curious about what in the OS could “possibly” be causing the issue.

If this NUC were just a Roon Core, I would reinstall the OS in the blink of an eye. But like most servers, it runs a couple of other services.

@danny is there any other way to move forward with this? Is discussing the problem on this forum the fastest way to get proper support from Roon?