This is a complicated topic.

Roon is written in C#. C# programs use garbage collection as a memory strategy. This means that a C# developer doesn’t write explicit code to free memory. They allocate and use objects and then stop using them when they’re no longer necessary. Sooner or later, the “garbage collector” kicks off, figures out what objects are no longer in use, and “frees” them.

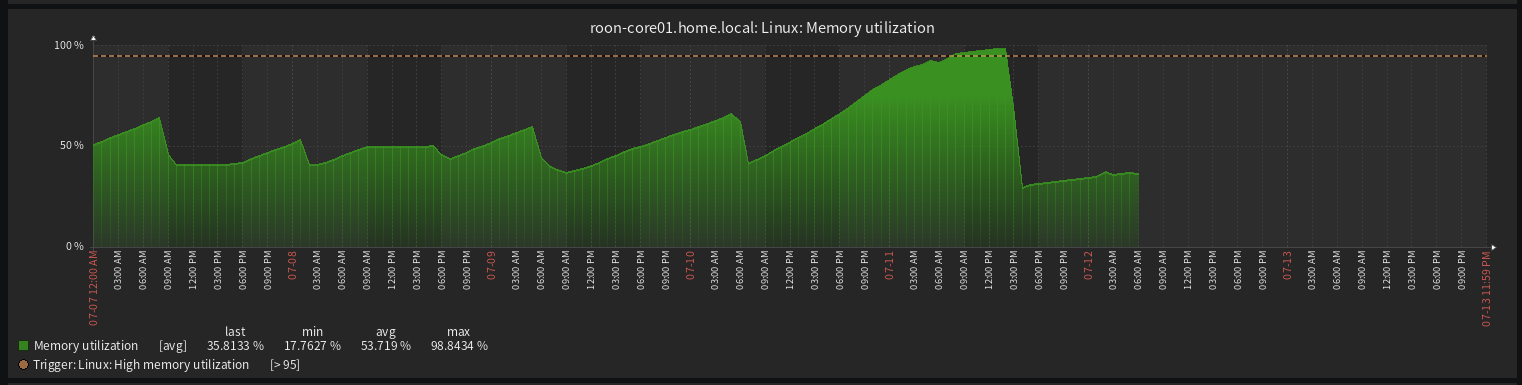

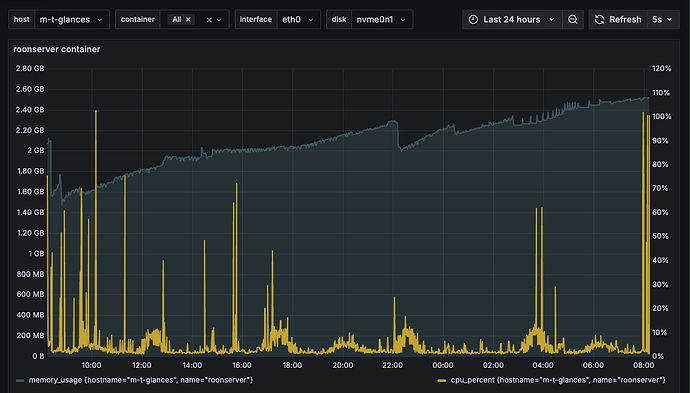

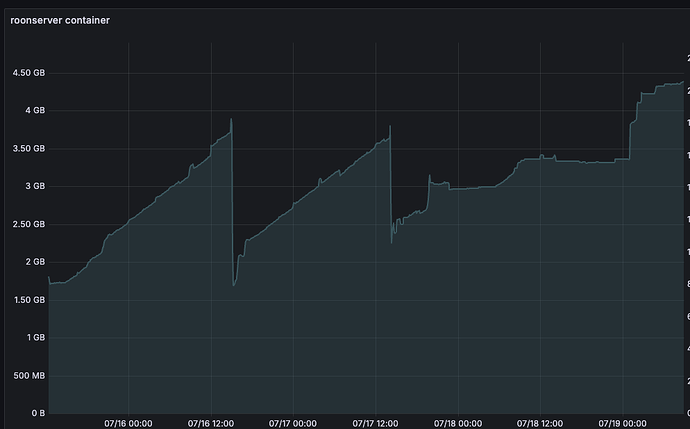

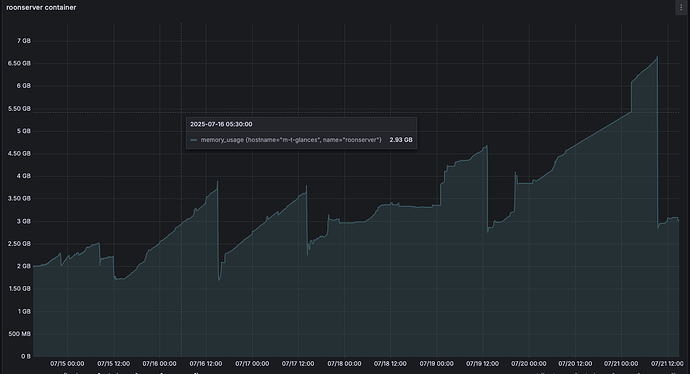

This means that in a C# program, you will always see some form of memory increase over time, then garbage collection. That’s the “sawtooth” profile that your picture illustrates.

This doesn’t mean that there aren’t memory leaks, but the pattern you show doesn’t illustrate memory leaks. A memory leak in a C# program can often show up as an increase in the low point in memory use over time. In other words, each time garbage collection happens, memory use drops. If, over time, the point that it drops to is clearly increasing, that can be a memory leak. Your picture doesn’t show that.
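If you want to check this against your own numbers rather than eyeballing a graph, here's a rough sketch in Python. It assumes you have a list of memory readings in MB taken at regular intervals. The function names and the 10 MB-per-cycle threshold are mine, made up for illustration, and have nothing to do with Roon itself. It picks out the low points after each drop and checks whether they trend upward.

```python
from typing import List


def find_troughs(samples: List[float]) -> List[float]:
    """Return the local minima: readings lower than the previous one
    and no higher than the next one (the bottom of each sawtooth)."""
    troughs = []
    for i in range(1, len(samples) - 1):
        if samples[i] < samples[i - 1] and samples[i] <= samples[i + 1]:
            troughs.append(samples[i])
    return troughs


def trough_slope(troughs: List[float]) -> float:
    """Least-squares slope of the trough values against their index,
    i.e. roughly how many MB the floor rises per GC cycle."""
    n = len(troughs)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(troughs) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(troughs))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def looks_like_leak(samples: List[float], min_rise_per_cycle_mb: float = 10.0) -> bool:
    """Flag a possible leak when the floor keeps rising.
    The threshold is an arbitrary assumption; tune it to your own data."""
    troughs = find_troughs(samples)
    return len(troughs) >= 3 and trough_slope(troughs) > min_rise_per_cycle_mb


# Hypothetical readings, not taken from any real Roon install.
healthy = [1000, 1400, 1800, 1000, 1400, 1800, 1010, 1400, 1800, 995, 1400, 1800, 1000, 1400]
leaky = [1000, 1400, 1800, 1050, 1450, 1850, 1100, 1500, 1900, 1150, 1550, 1950, 1200, 1600]

print(looks_like_leak(healthy))
print(looks_like_leak(leaky))
```

A flat floor with a big sawtooth on top is just garbage collection doing its job. A floor that climbs steadily across many cycles is the thing worth worrying about.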

In my opinion, Roon’s memory usage and garbage collection are poorly tuned. Garbage collection happens far too infrequently, and as a consequence each collection runs longer and is more CPU-intensive than I believe to be healthy. Because of how Roon has configured GC, this shouldn’t necessarily be problematic, but it can be.

You’ve tried adding more memory to help with the problem you’re seeing. That’s not going to help and may make this worse.

What I’m finding is that Roon runs well when it’s constrained to enough memory to accommodate the user’s database (LevelDB likes to live in RAM) and then some fixed headroom. I have a recommendation for an experiment.

Figure out how much memory Roon is using after it’s been running for 15 minutes and you’ve been playing music on the number of zones you typically play at the same time. Take that number and add about 1.5GB to it. Assign that much memory to your VM. Restart.
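To make the arithmetic concrete, here's a tiny Python sketch. The 2.5 GB reading is a made-up example, not a measurement, and the helper names are my own. The 1.5 GB headroom is the rough figure from above, and the MiB conversion assumes your hypervisor takes memory in MiB with 1 GB treated as 1024 MiB.

```python
import math

HEADROOM_GB = 1.5  # the "about 1.5GB" from the experiment above


def recommended_vm_memory_gb(steady_state_gb: float, headroom_gb: float = HEADROOM_GB) -> float:
    """Steady-state usage (after ~15 minutes of typical playback) plus fixed headroom."""
    if steady_state_gb <= 0:
        raise ValueError("steady-state usage must be a positive number of GB")
    return steady_state_gb + headroom_gb


def gb_to_mib(gb: float) -> int:
    """Round up to whole MiB, assuming 1 GB is entered as 1024 MiB."""
    return math.ceil(gb * 1024)


measured_gb = 2.5  # hypothetical reading; substitute your own
target_gb = recommended_vm_memory_gb(measured_gb)
print(target_gb, gb_to_mib(target_gb))
```

Treat the result as a starting point for the experiment, not a hard rule. If your library is large, the steady-state number will be higher and the limit moves up with it.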

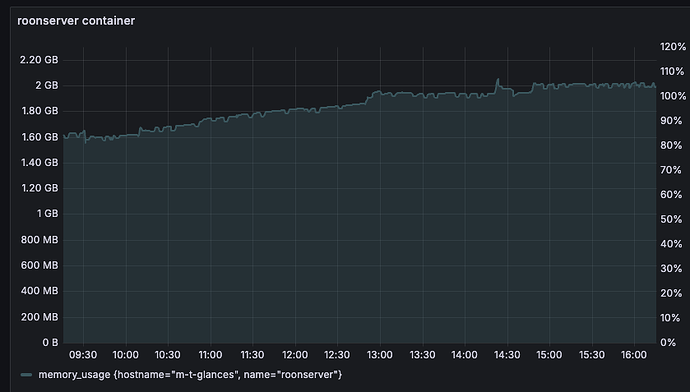

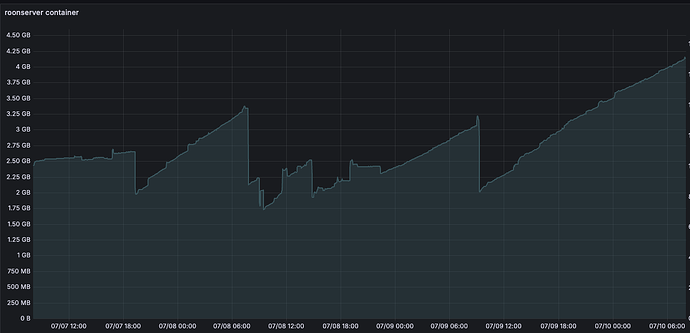

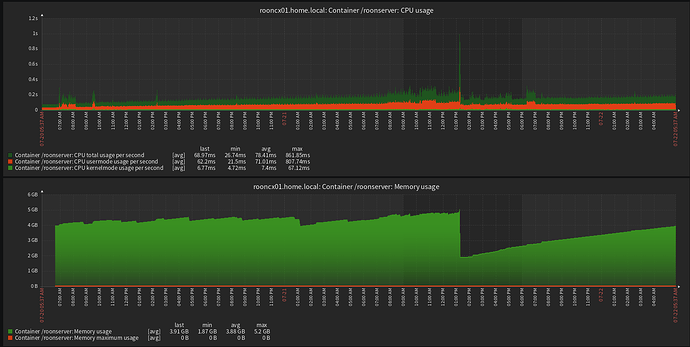

My guess is that you’ll see memory grow and drop in a way that still illustrates garbage collection, but the collections will be much more frequent, use much less CPU, and be far less dramatic. I run Roon in a container. When I allow it to have a lot of memory, it looks like what you’re seeing. When I constrain it, it looks like this:

I’m very interested in learning what you see if you try this. I’m increasingly of the opinion that Roon really needs to do a deep dive on how they are configuring garbage collection.