Hi All,

It’s been just about a year since I last wrote about search, so I figured it was a good time to provide an update on what’s been going on behind the scenes.

The Roon team has been working on improving the search engine throughout 2022, and last week, we rolled out a new architecture for search. If you are running Roon 2.0, you have been using the new stuff for several days.

Previously, the cloud searched the TIDAL/Qobuz libraries while the Core searched your personal library and then merged the two sets of results.

Now, the Core gathers potential matches for the search query and submits them to a cloud service. The cloud service then searches the TIDAL/Qobuz libraries if needed, ranks and merges the results, and returns the final list to the app.
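For the technically curious, here is a rough sketch of that flow in Python. It is only an illustration, not our actual code: the `Candidate` type, the `core_gather` and `cloud_rank` functions, the substring matching, and the toy scoring rule (exact match, then prefix match, then library items first) are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    title: str
    source: str  # "library" (from the Core) or "streaming" (TIDAL/Qobuz)


def core_gather(query, library_titles):
    """Core side (hypothetical): collect library items that might match."""
    q = query.lower()
    return [Candidate(t, "library") for t in library_titles if q in t.lower()]


def cloud_rank(query, library_candidates, streaming_titles):
    """Cloud side (hypothetical): add streaming matches, then rank everything together."""
    q = query.lower()
    streaming = [Candidate(t, "streaming") for t in streaming_titles if q in t.lower()]

    def sort_key(c):
        title = c.title.lower()
        # Toy scoring (an assumption): exact match, then prefix match,
        # then library items ahead of streaming items.
        return (title == q, title.startswith(q), c.source == "library")

    # One list and one scoring function, so there are no separate rankings to reconcile.
    return sorted(library_candidates + streaming, key=sort_key, reverse=True)


library = ["John Coltrane", "Elton John"]
streaming = ["John Lennon", "Olivia Newton-John"]
results = cloud_rank("john", core_gather("john", library), streaming)
for c in results:
    print(c.title, c.source)
```

The point of the sketch is that every candidate, whatever its source, passes through the same ranking step.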

This change will allow us to deliver better results and improve the search engine more quickly. For more information, keep on reading.

Automated Testing Tools

One of the challenges of improving a search engine is understanding the effects of each change that we make. A change that improves one search might accidentally make other searches worse. In order to make progress, we need to be able to test our search system and understand the intended and unintended consequences of each change.

We introduced an automated testing system in 2022 to help us with that. This system allows us to test thousands of searches in the cloud without using the Roon Core. Our test infrastructure will help us make sure we’re not making things worse as we continue to improve the search engine.
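To give a feel for the idea, here is a minimal sketch of a regression check, assuming each test case is a query paired with an item we expect near the top of the results. The function names, the `top_k` cutoff, and the sample data are hypothetical and are not taken from our real test system.

```python
def failing_cases(search_fn, cases, top_k=3):
    """Return the queries whose expected item is missing from the top_k results."""
    failed = []
    for query, expected in cases:
        if expected not in search_fn(query)[:top_k]:
            failed.append(query)
    return failed


def find_regressions(baseline_fn, candidate_fn, cases, top_k=3):
    """Queries that passed with the baseline but fail with the candidate."""
    failed_before = set(failing_cases(baseline_fn, cases, top_k))
    return [q for q in failing_cases(candidate_fn, cases, top_k) if q not in failed_before]


# Made-up results standing in for two versions of a search engine.
cases = [("john", "John Lennon"), ("pink", "Pink Floyd")]
baseline = {"john": ["John Lennon", "Elton John"], "pink": ["P!nk", "Pink Floyd"]}
candidate = {"john": ["John Lennon"], "pink": ["P!nk", "Pink Martini"]}

regressions = find_regressions(
    lambda q: baseline.get(q, []), lambda q: candidate.get(q, []), cases
)
print(regressions)
```

Comparing against a baseline, rather than just counting failures, is what surfaces the searches that a change accidentally made worse.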

Shorter Cycle Time for Improvements

By moving the search engine to the cloud, we can release improvements without shipping new versions of the Roon Core and apps. This reduces the time it takes to deliver improvements to our users, and allows us to iterate quickly on specific issues when they are reported.

Metrics

While our primary goal is to make our users happy, it’s also helpful to have objective signals that show we are making things better. The new search system includes an array of metrics that allow us to monitor search results quality on an ongoing basis. This helps us see if our changes are helping, and also helps us catch any accidental issues that might degrade the quality of search results for our users.

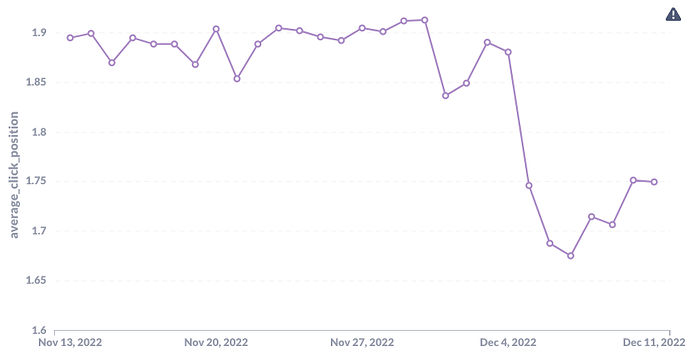

As an example, the “Average Click Position” metric measures the average position of the item a user clicks when they select an instant search result. A value of ‘1’ means that the user selected the first item in the list, so lower numbers are better.

The chart above shows the results from last week’s rollout. You can see that the number dropped quickly around the time of our rollout, which indicates that our changes were an improvement.
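The arithmetic behind a metric like this is simple. Here is a minimal sketch, assuming click positions are recorded as 1-based integers. The function name and the sample numbers are made up for illustration and are not real data.

```python
def average_click_position(click_positions):
    """Mean 1-based position of the clicked result; lower is better."""
    if not click_positions:
        raise ValueError("need at least one click")
    return sum(click_positions) / len(click_positions)


# Invented click logs, not measurements from the rollout.
before = [3, 1, 4, 2, 5]
after = [1, 2, 1, 1, 3]
print(average_click_position(before), average_click_position(after))
```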

Smarter Ranking

One of the challenges with the old search system was merging lists that had been ranked separately in the Core and in the cloud. It was difficult to compare scores from different ranking systems, so we relied on heuristics that tried to balance the ranks, popularity information, and text match accuracy.

This often led to less-than-ideal results, and troubleshooting issues was time-consuming because we had to replicate each user’s library in-house in order to see the issues. Since search ranking is now performed in the cloud in a unified way, this class of tricky issues has disappeared.

More Powerful Algorithms

Today, state-of-the-art search systems use machine learning to deliver results. It was difficult to use these techniques in the old Roon Core, so one of the main goals of the re-architecture was to enable this.

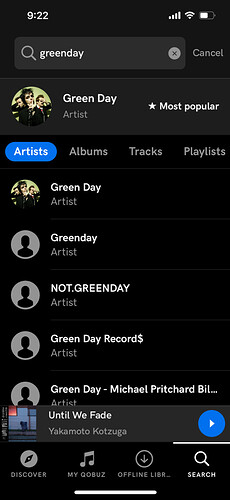

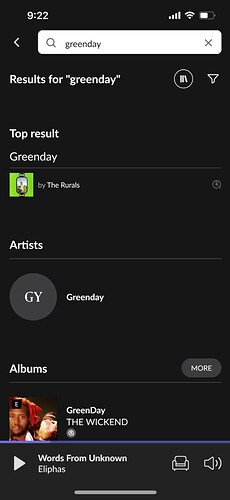

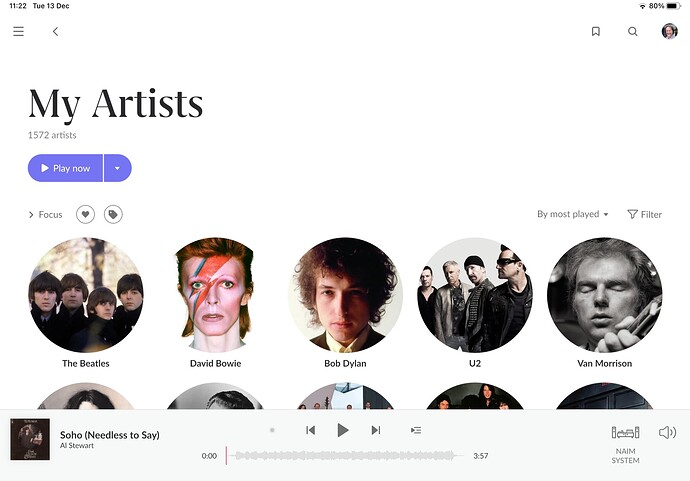

In the future, we will be able to tailor results to individual users. For example, a Beatles fan typing “John” into the search box probably expects “John Lennon” to be the top result, while a jazz listener might expect “John Coltrane”.

This will also enable the implementation of modern search techniques like spelling corrections, search suggestions, semantic search, and gradient boosting, none of which are practical within the Roon Core.

Instant Search Improvements

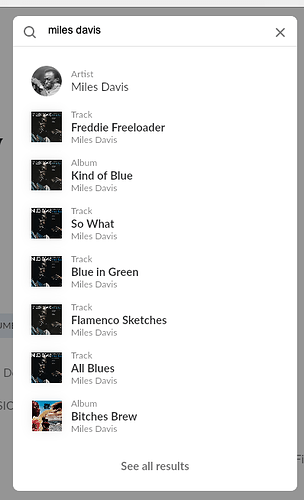

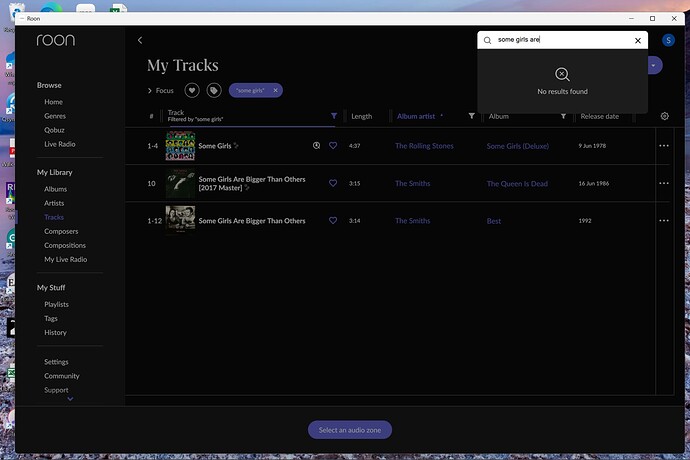

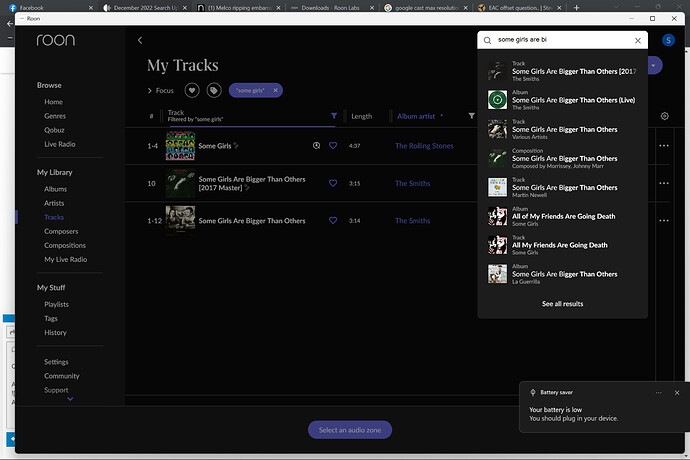

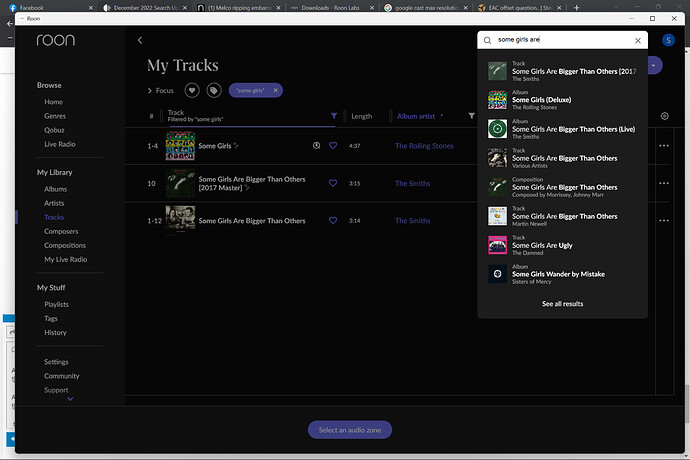

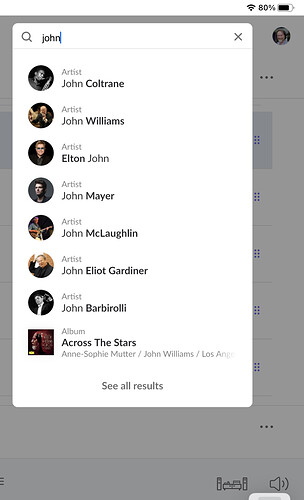

After deploying the new architecture internally in September, we started the process of tuning it to perform better than the old system. We focused on improving the instant search dropdown, making sure that fewer characters are required to reach the desired result, and that the results continue to feel sensible as more characters are entered.

In addition to automated testing, we have a weekly review process where the product and search teams come together to examine a set of 50 representative searches and discuss how the results changed because of the previous week’s work. This helps us understand the tradeoffs and make decisions that prioritize the user experience.

Dozens of Smaller Improvements

As part of this work, we made many smaller improvements to the search system. Shorter queries like “john” or “pink” should now return more coherent results. The system does not directly auto-correct misspellings, but it is more tolerant of misspelled terms in multi-word searches. It is also better at prioritizing exact matches, deduplicating similar results, and choosing the best version of an album or track when there are multiple versions available.

–

While things have definitely gotten better in the past year, 2022 was mostly about laying the groundwork. We plan to make faster and more visible progress in 2023 and beyond.

I want to thank everyone for their patience as we work on improving the search engine, and for all the feedback in last year’s thread. It has been very helpful in understanding our users’ perspectives, and we hope you will continue to use our product and provide feedback in the future.