Core Machine (Operating system/System info/Roon build number)

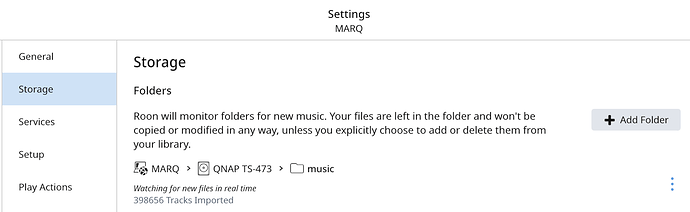

QNAP TS-473, AMD R-Series RX-421ND quad-core 2.1 GHz CPU, 20 GB RAM, running QTS 4.4.3.

Roon database on SSD (10.6 GB used out of 400 GB).

Roon version 1.7 (build 667), QPKG version 2020-07-15

Media Library on NAS (around 57,000 tracks)

Qobuz Studio Sublime subscription

Network Details (Including networking gear model/manufacturer and if on WiFi/Ethernet)

Unifi Managed Switch, Unifi AP-AC PROs, HP ProCurve Switches

Audio Devices (Specify what device you’re using and its connection type - USB/HDMI/etc.)

Linn Akurate DSM, Linn Majik DS, AirPlay devices

Description Of Issue

Dear support team,

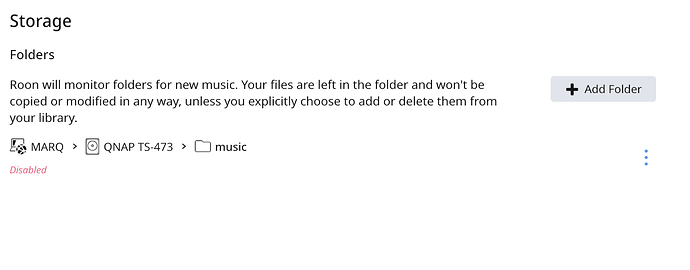

I have been enjoying Roon for a year and a half, but since updating to build 667 my setup has become close to unusable. It started about two weeks ago, when I noticed the RoonServer process using up to 90% of the NAS CPU for a few minutes every day (the system restarts every morning). I realized that the system was running a background audio analysis on a very large number of files (around 350,000, compared with my 57,000 local tracks); I assume this now also includes Qobuz tracks.

Although the analysis eventually completed, my system has been unstable ever since. When I browse for music or fill the queue, Roon restarts at random and I am left looking at a white screen with the Roon logo, which I assume indicates the restart. Once the service is available again, it may work for a while or restart with my very next action. I have even experienced restarts while music was playing!

The log shows errors such as:

Got a SIGSEGV while executing native code. This usually indicates a fatal error in the mono runtime or one of the native libraries used by your application.

Could not exec mono-hang-watchdog, expected on path ‘/share/CACHEDEV1_DATA/.qpkg/RoonServer/RoonServer/RoonMono/etc/…/bin/mono-hang-watchdog’ (errno 2)

I would be happy to share the full log with you (it clearly shows how often the problem occurs) and any other information you might need.

Thank you so much for helping me out here!

Looking forward to hearing from you,

Thomas